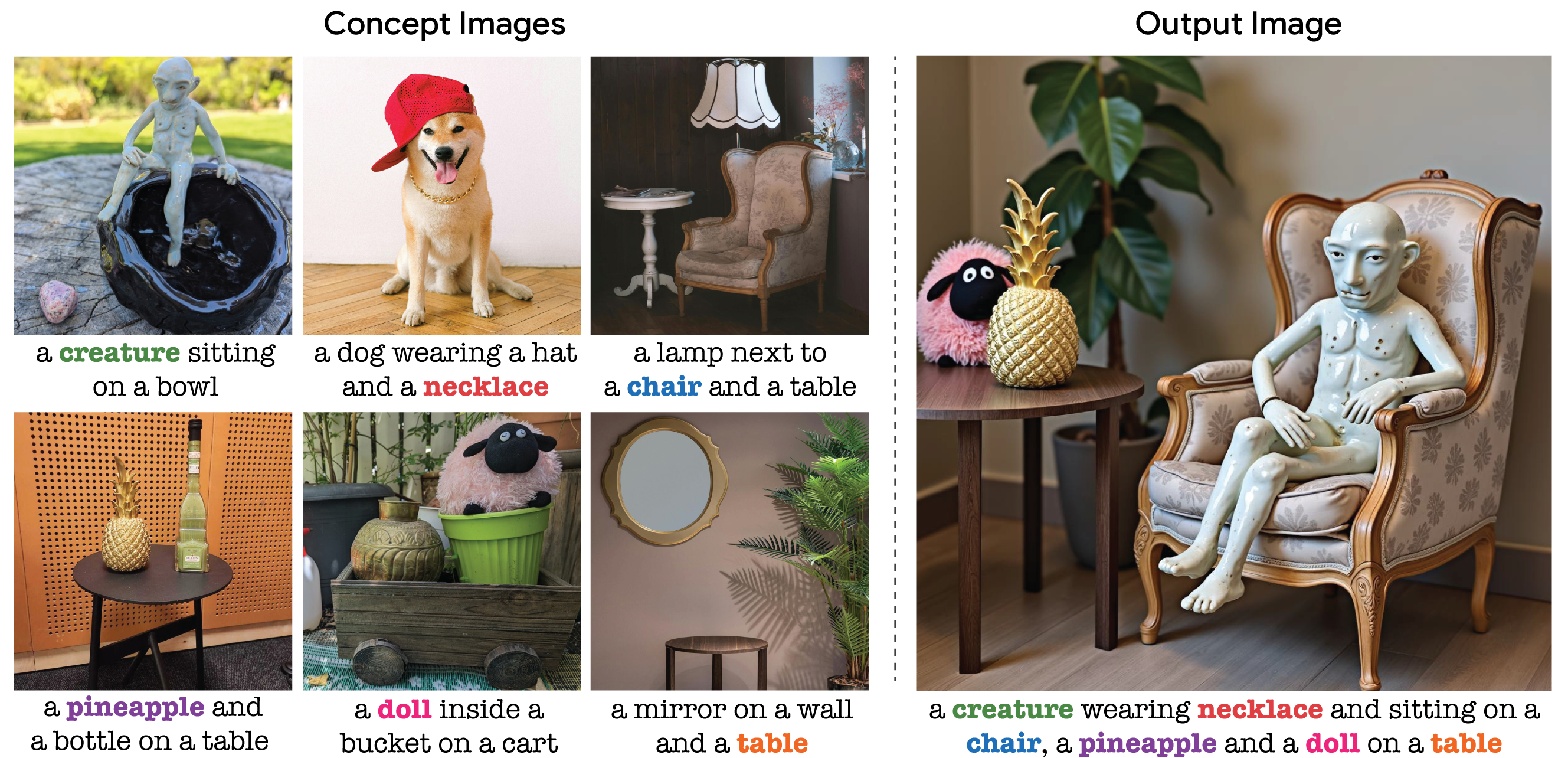

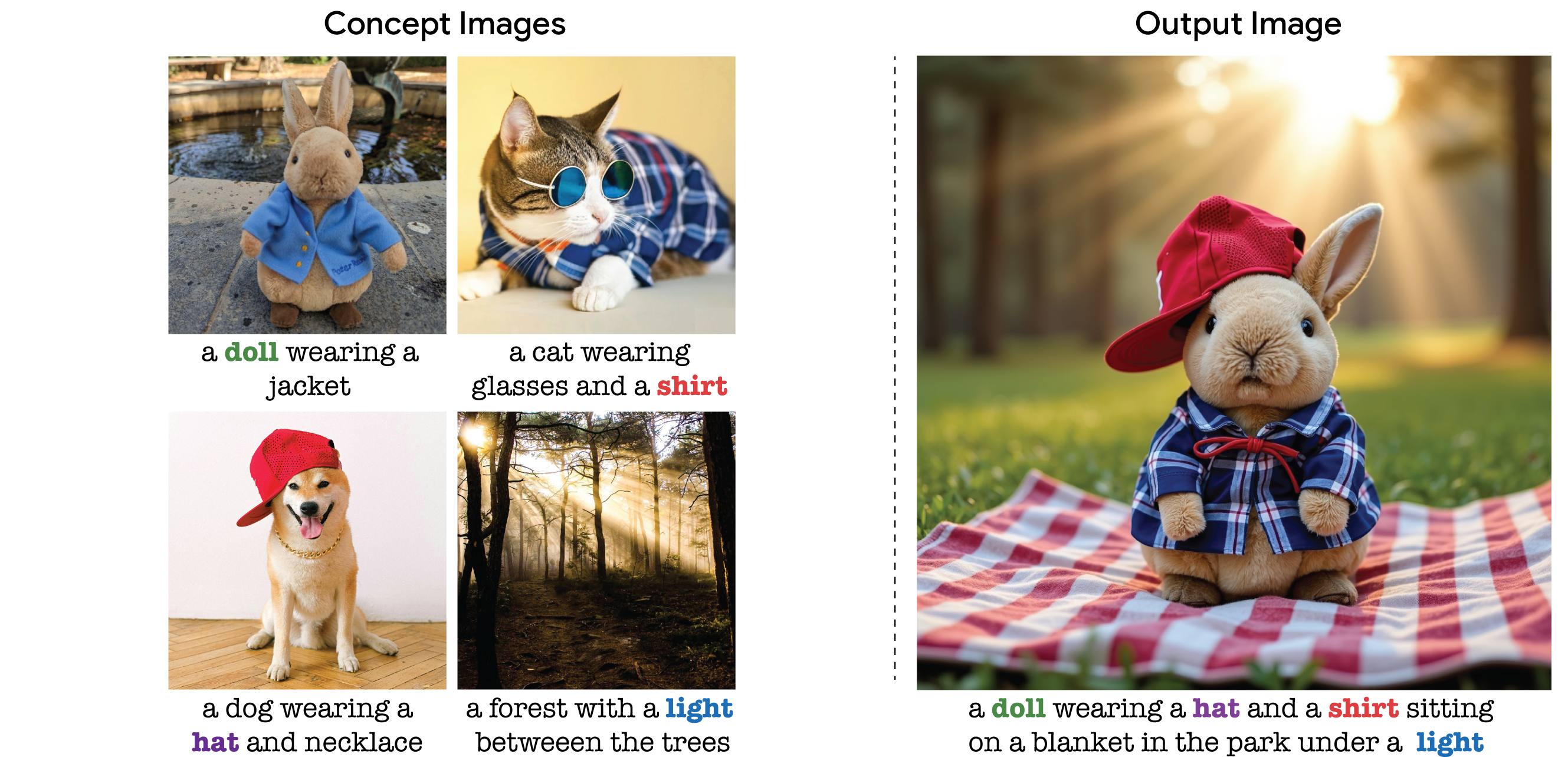

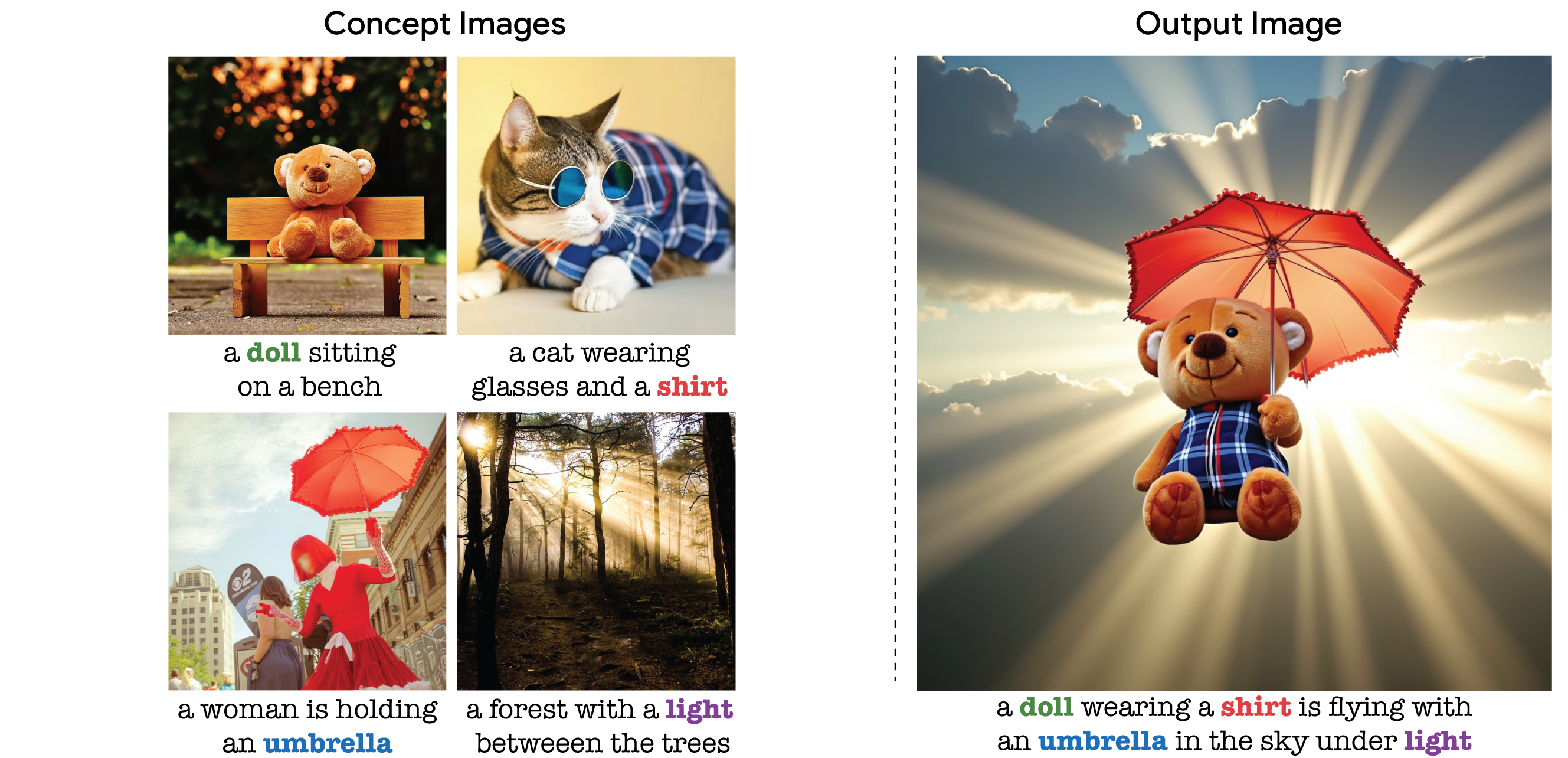

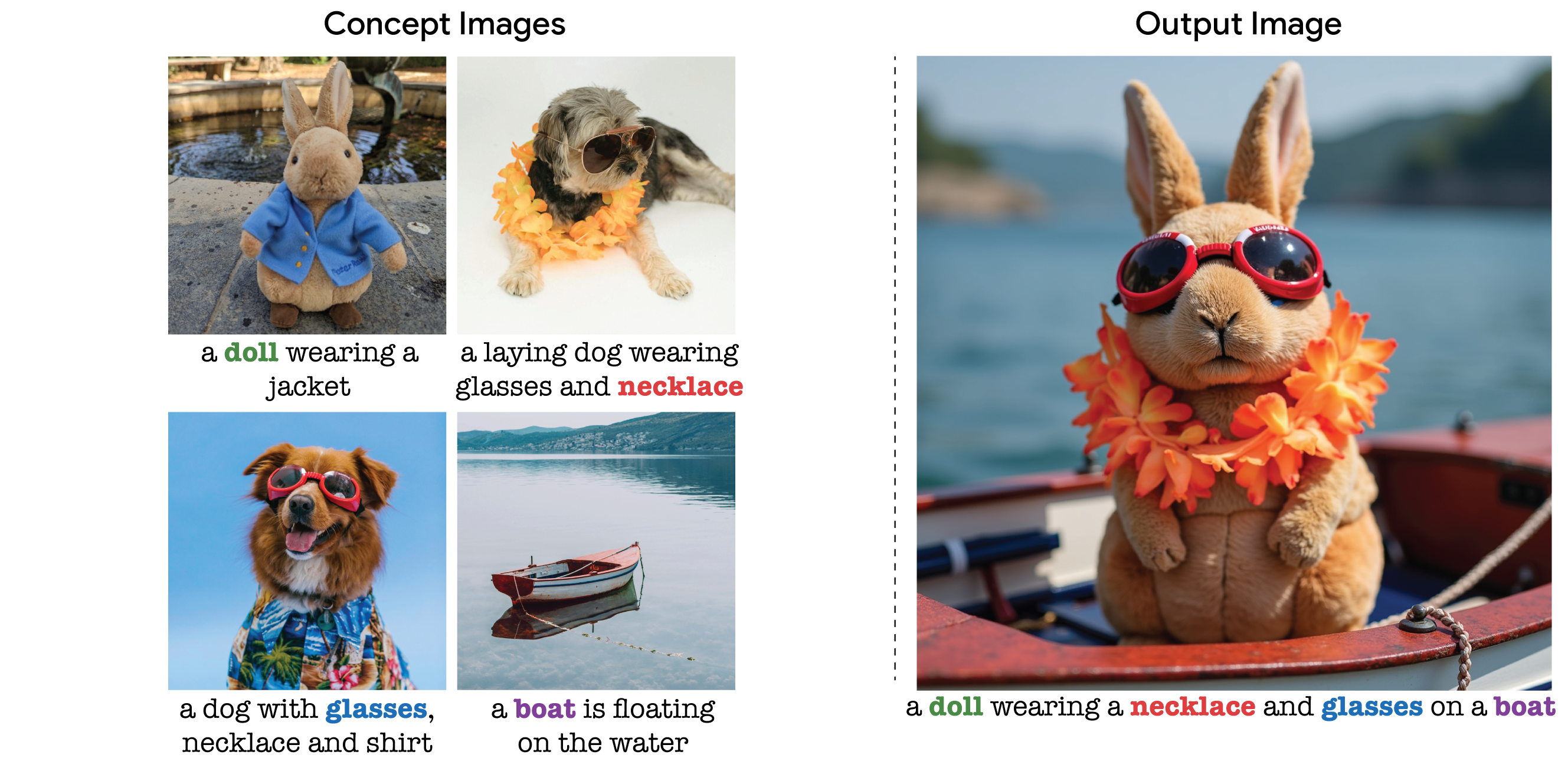

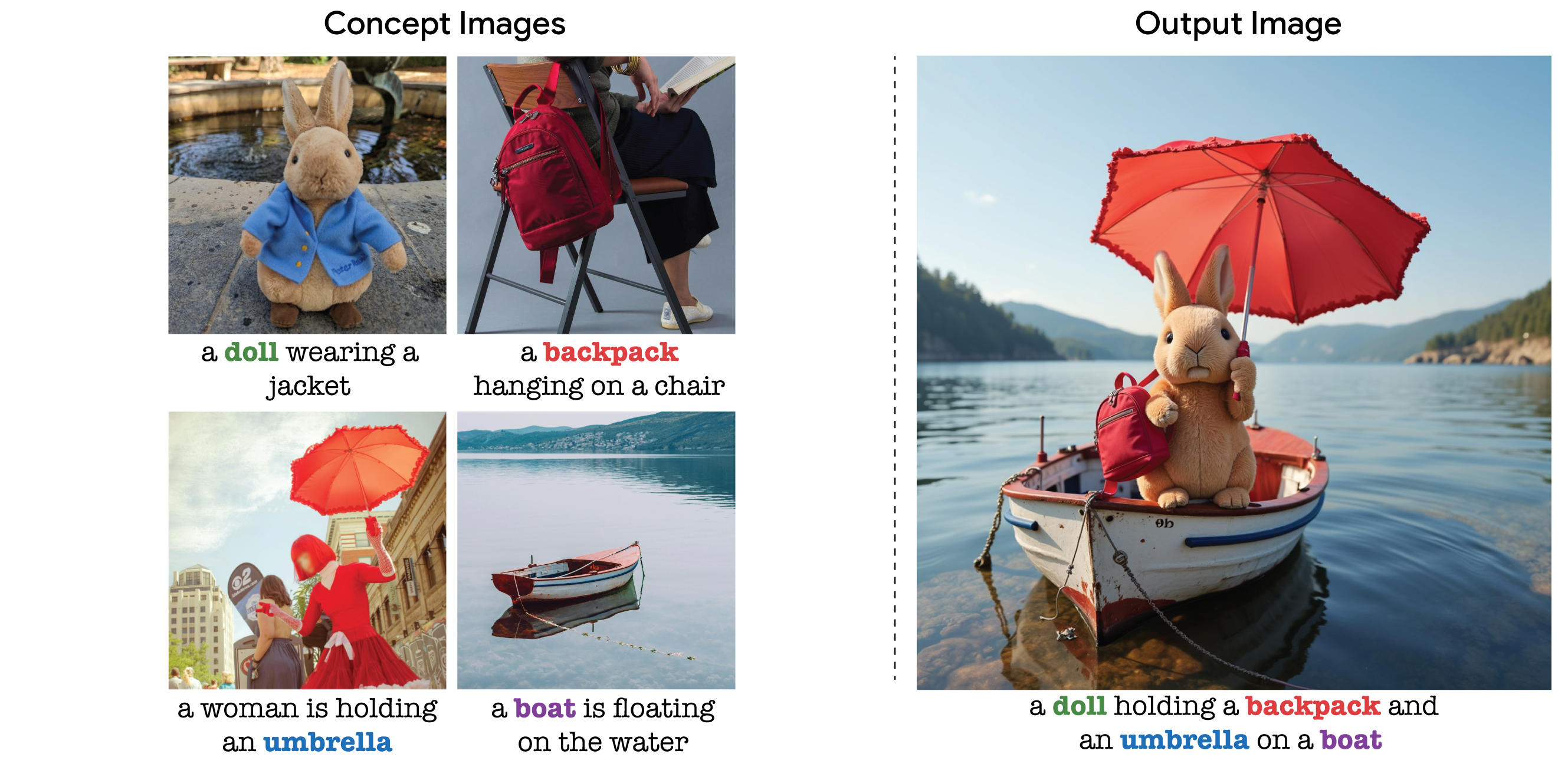

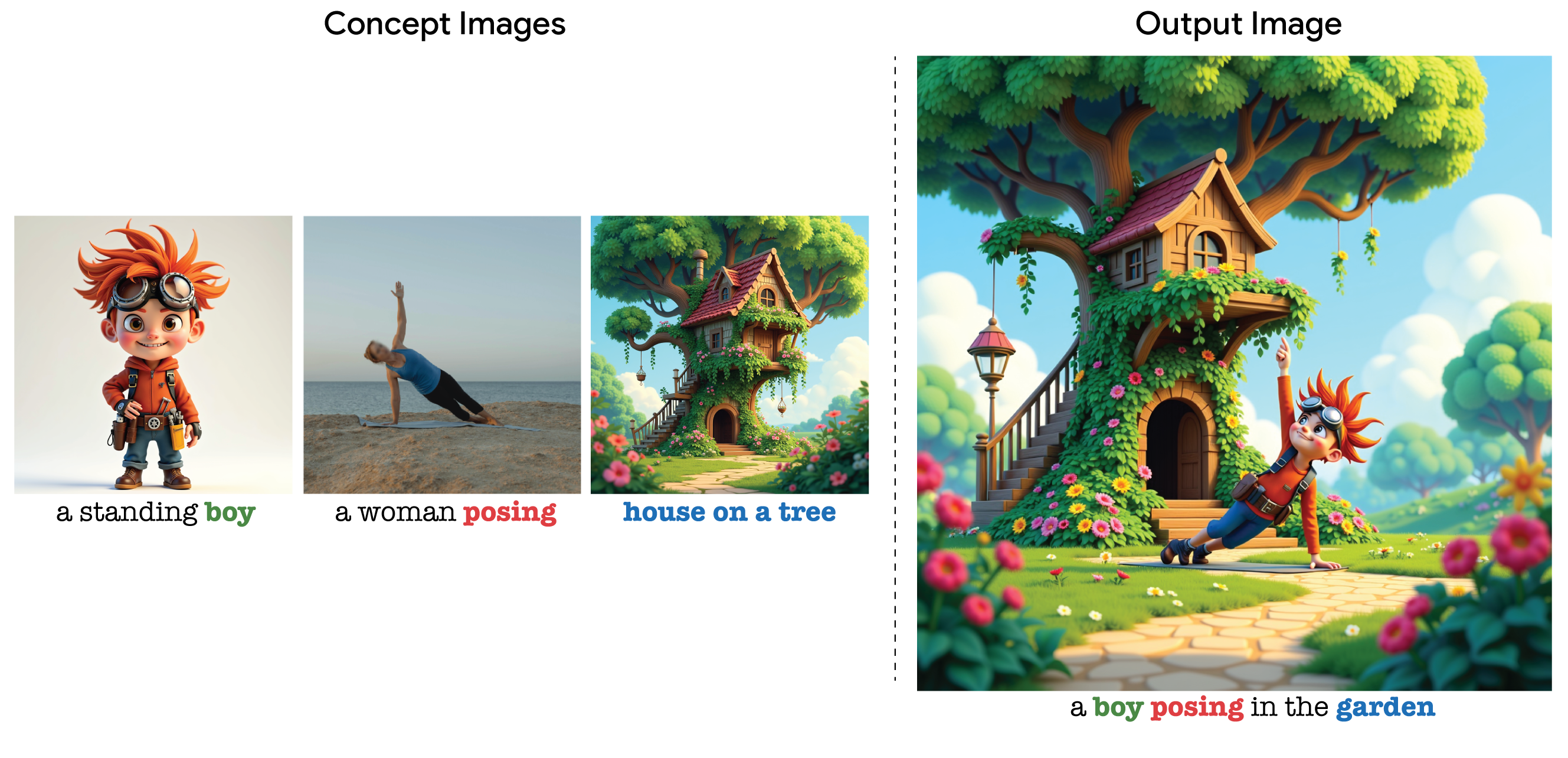

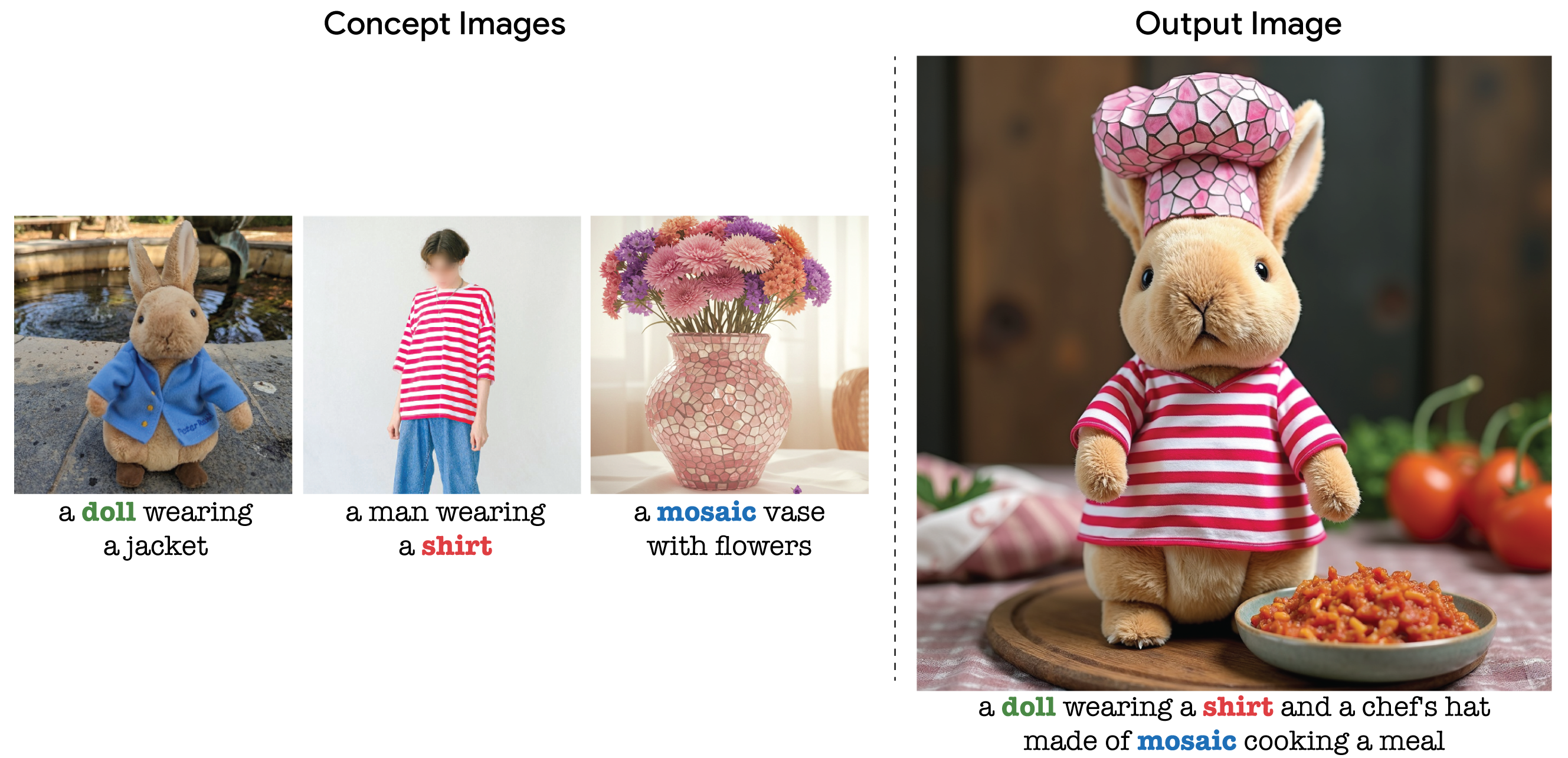

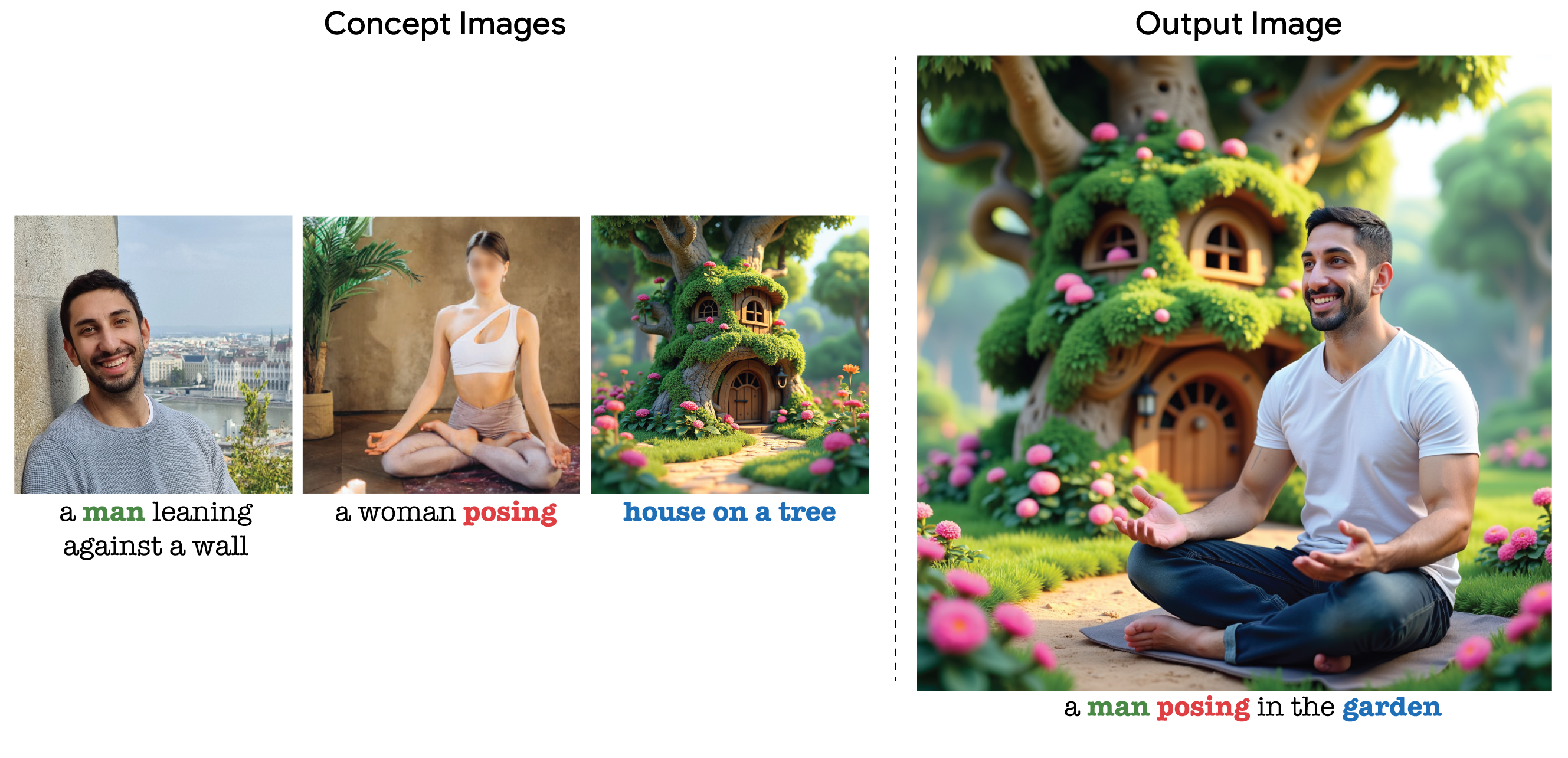

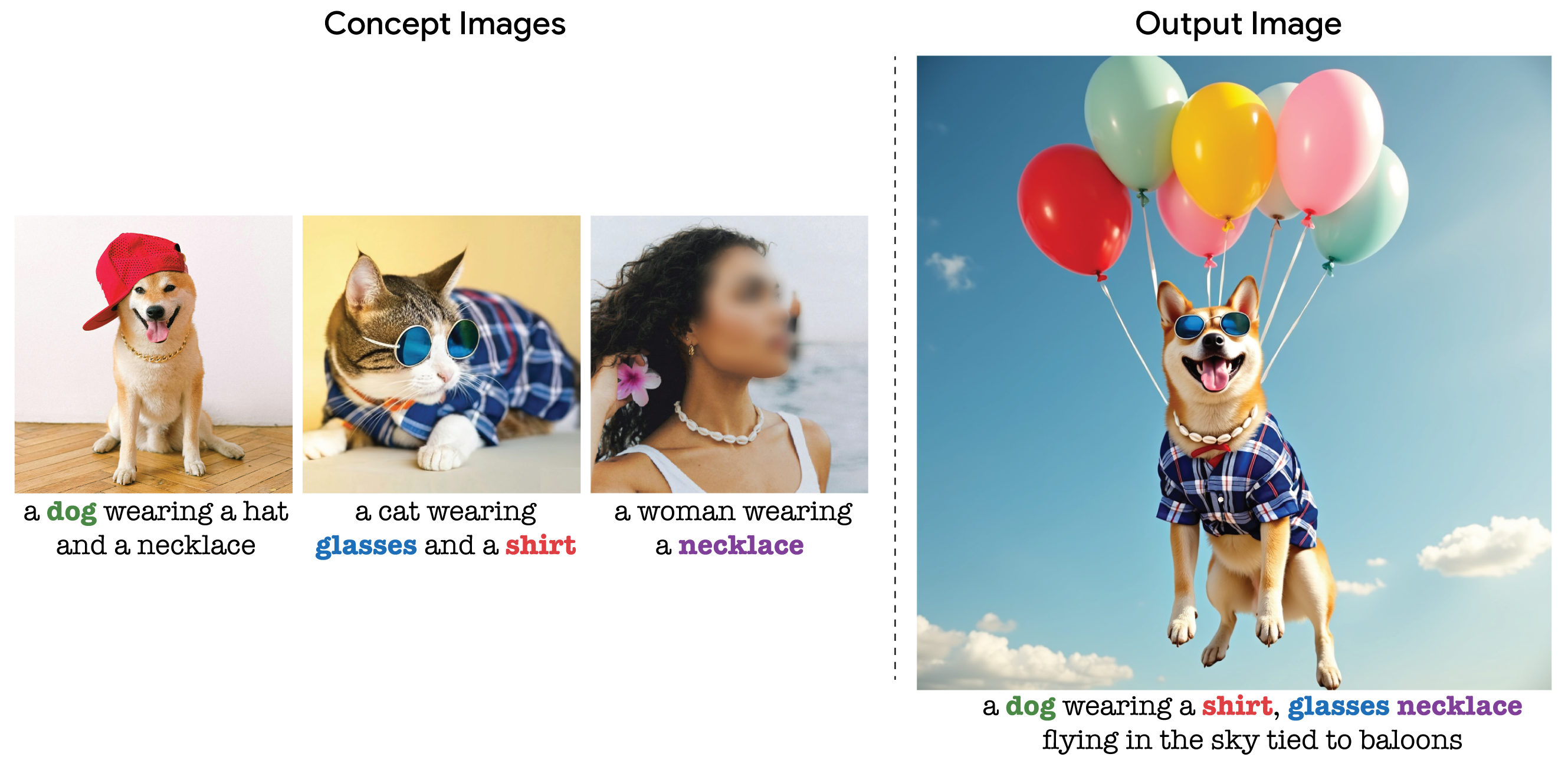

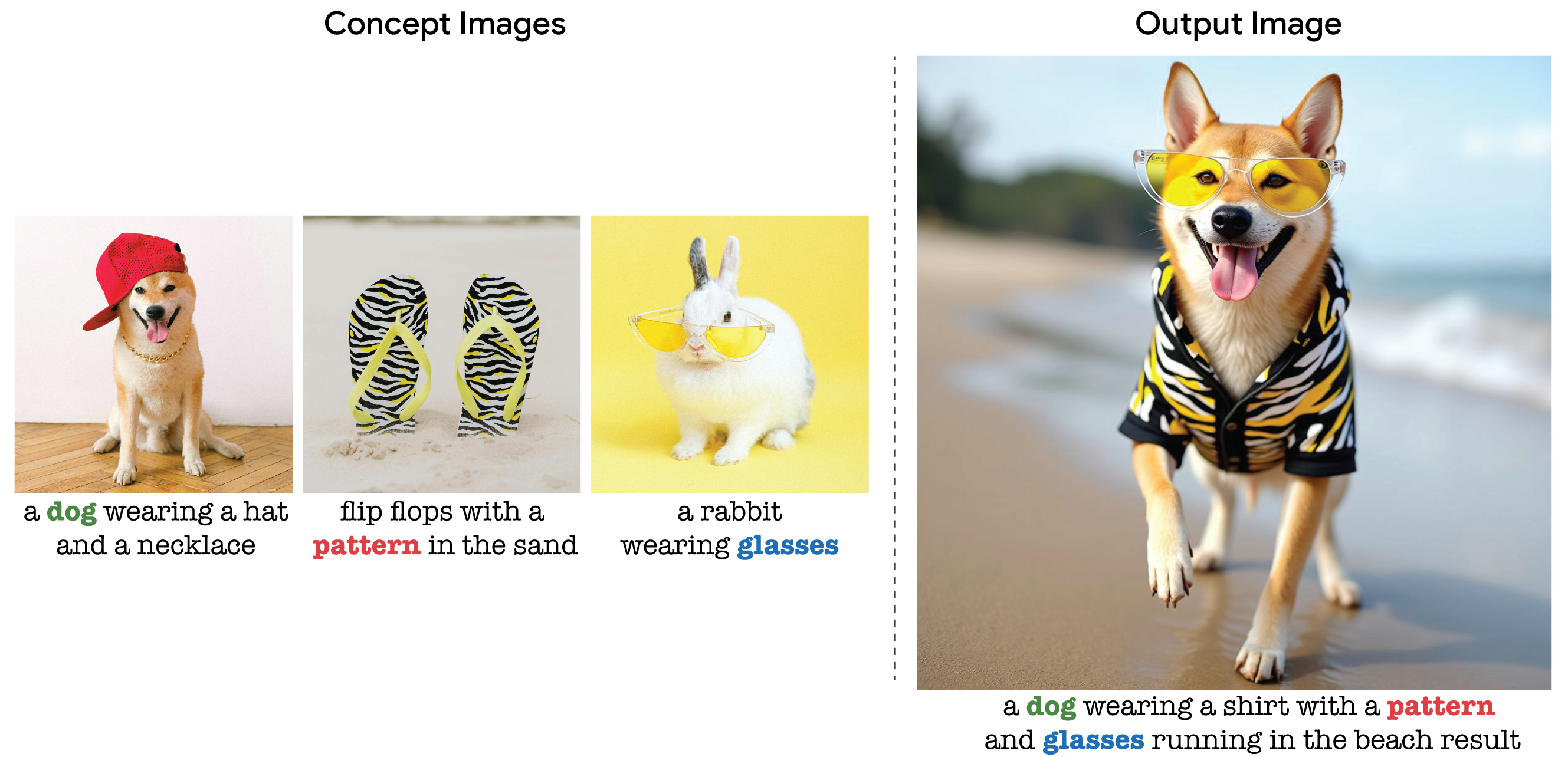

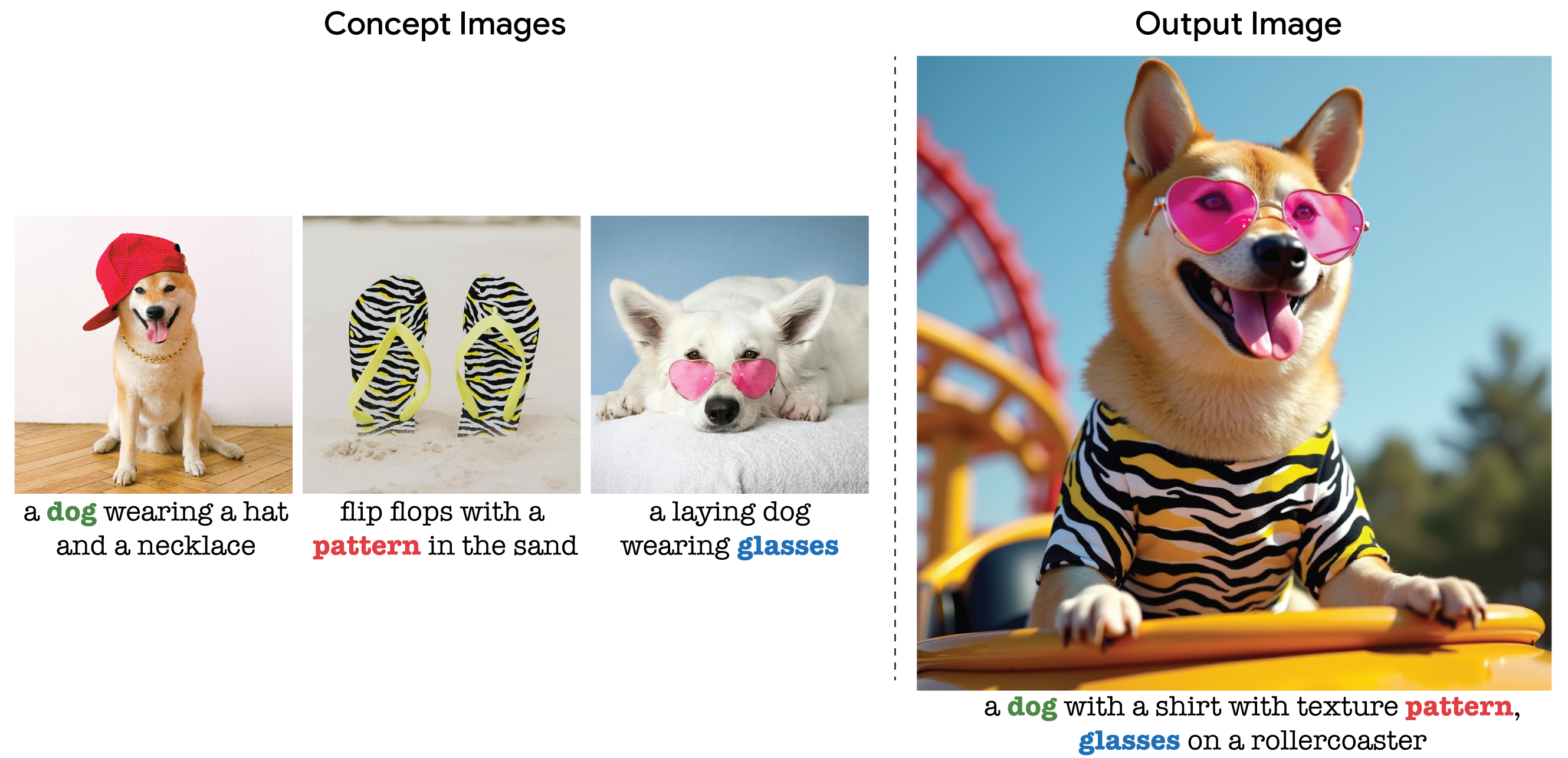

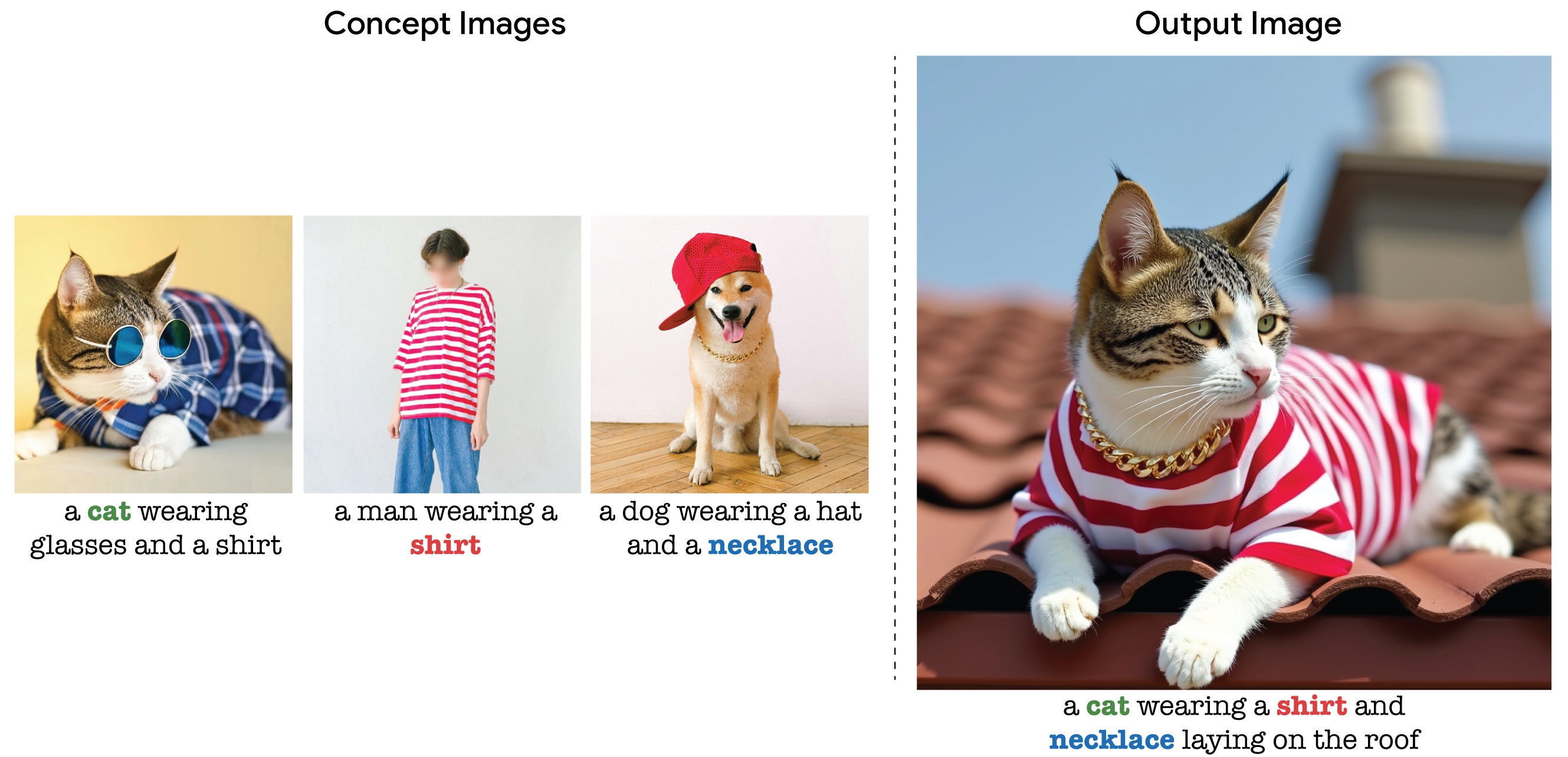

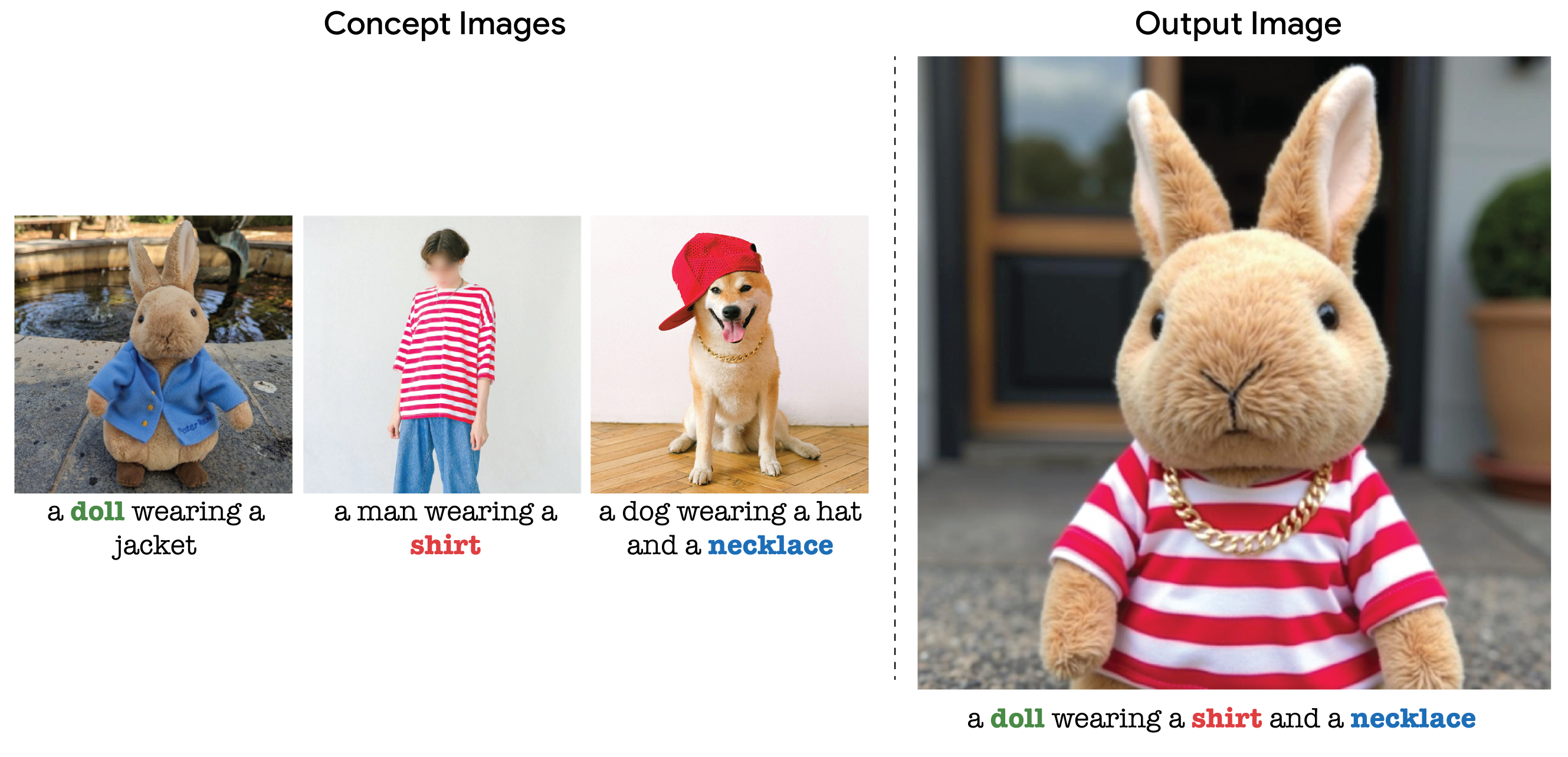

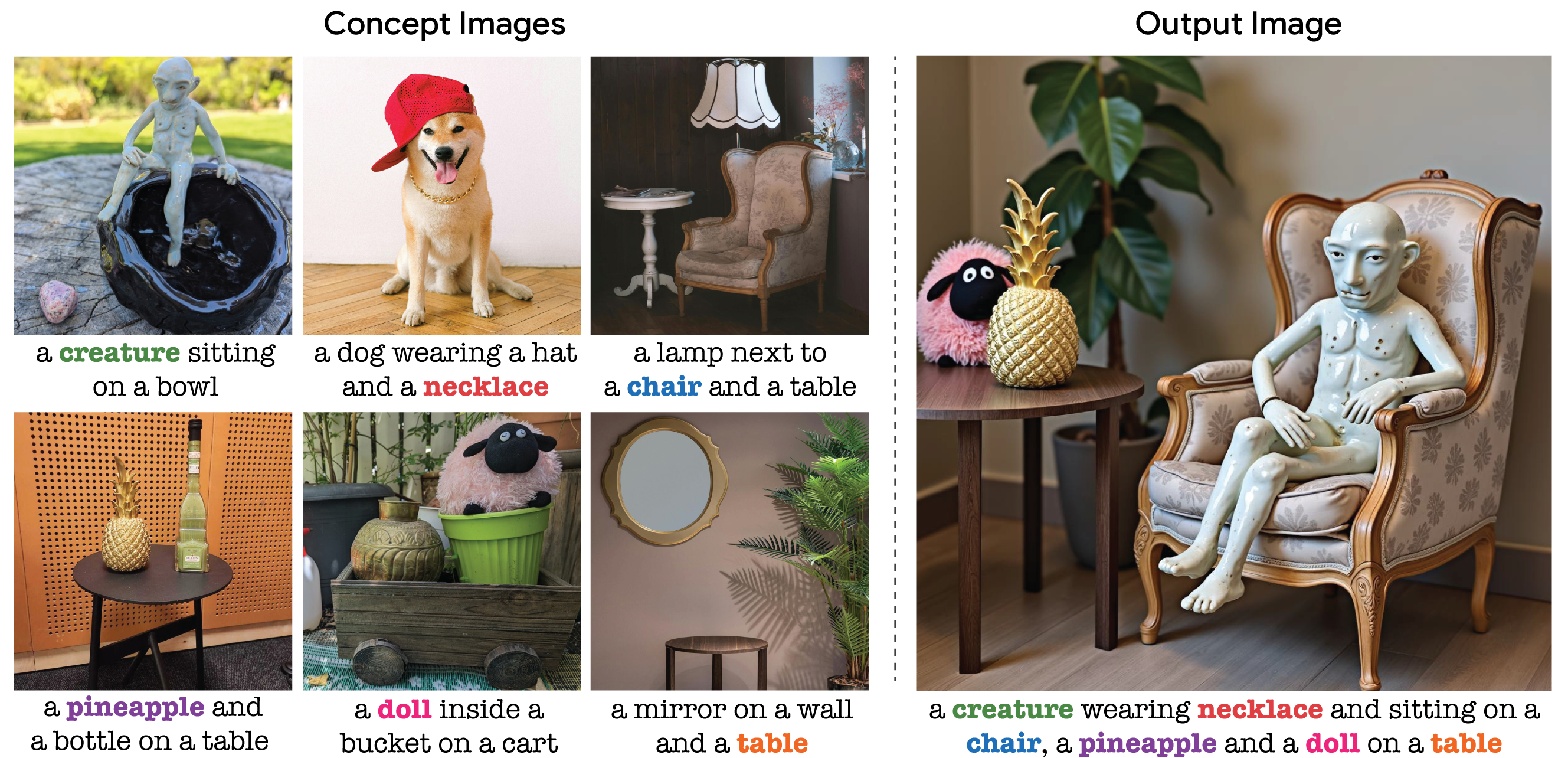

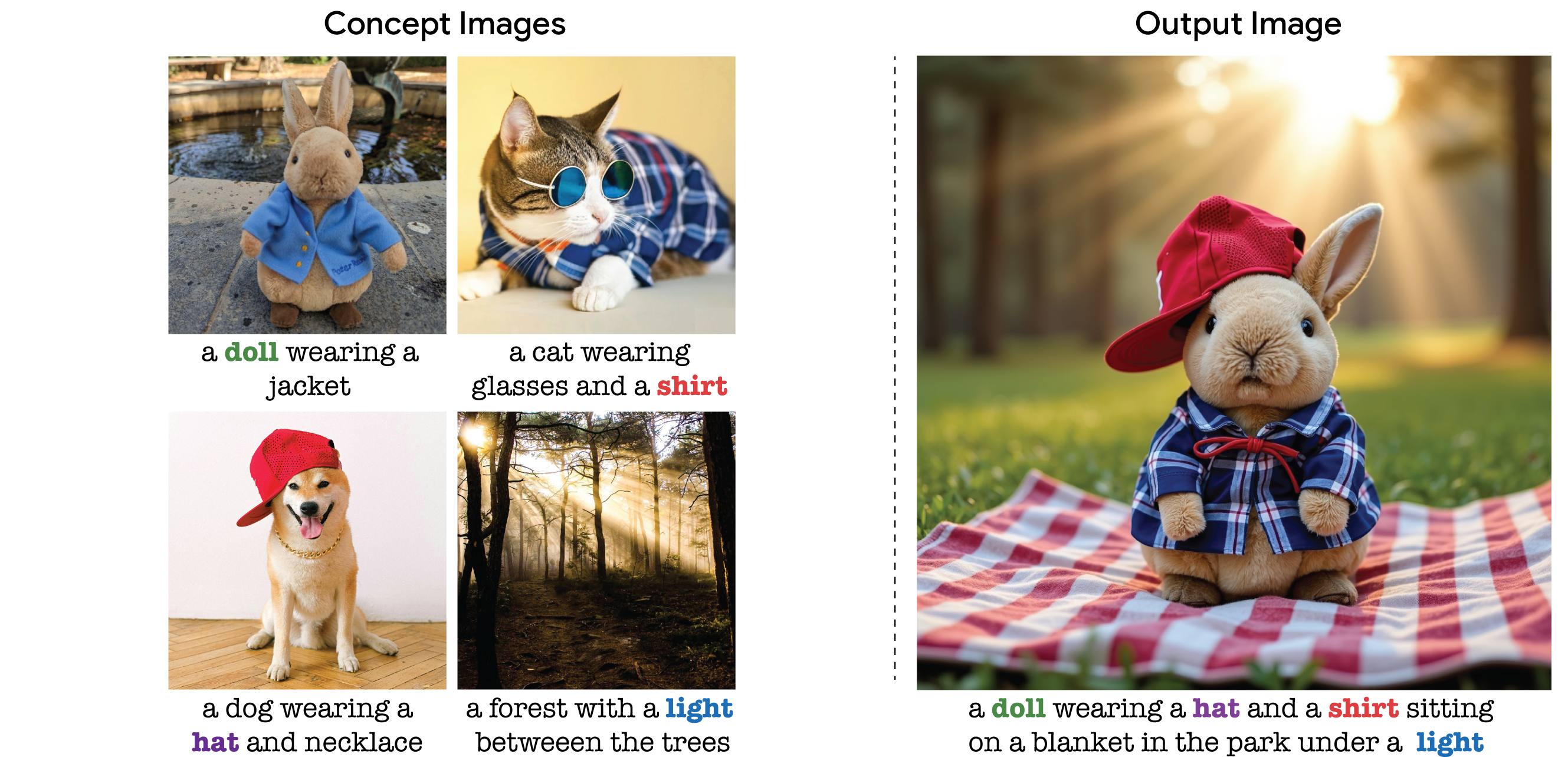

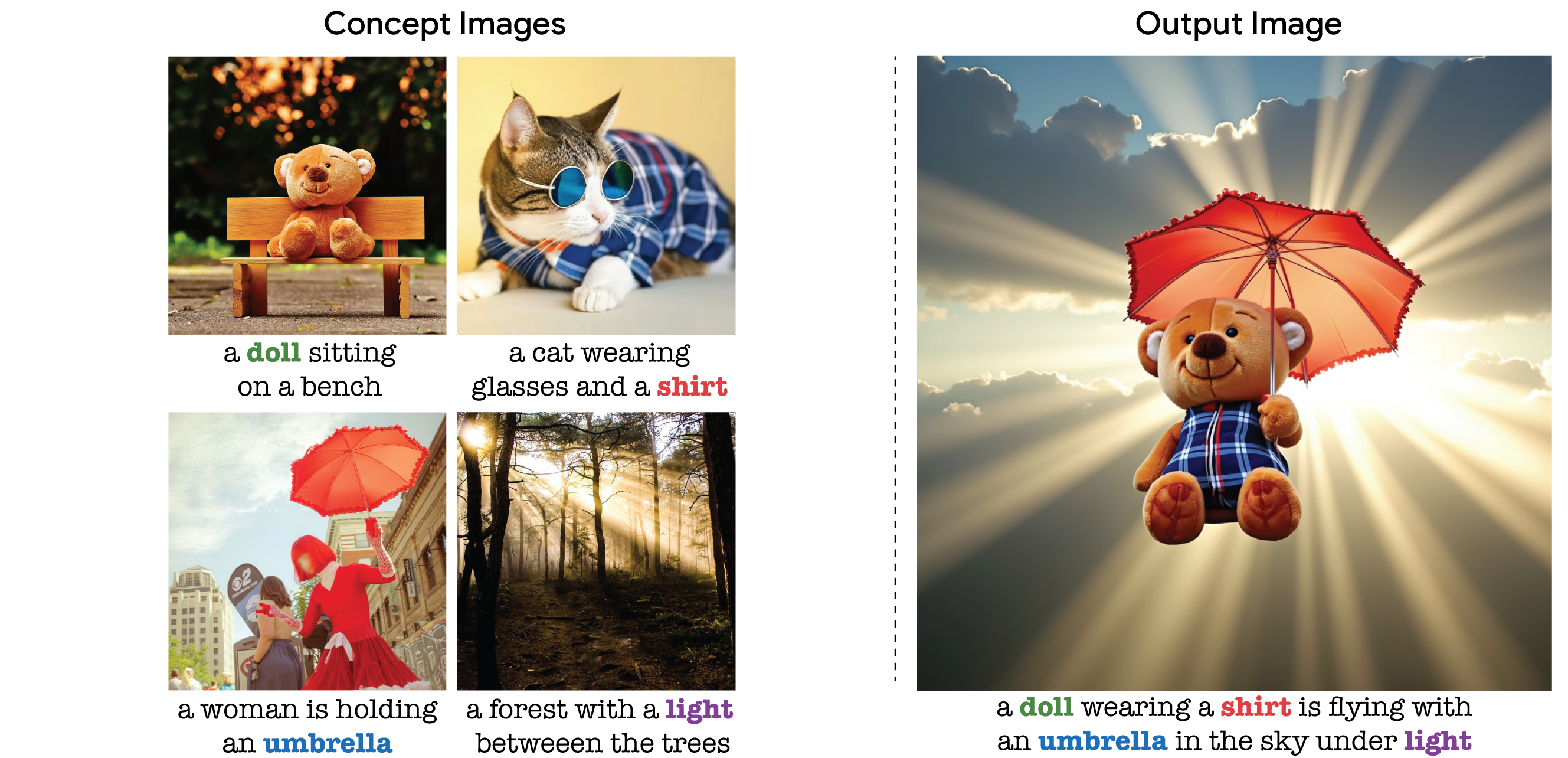

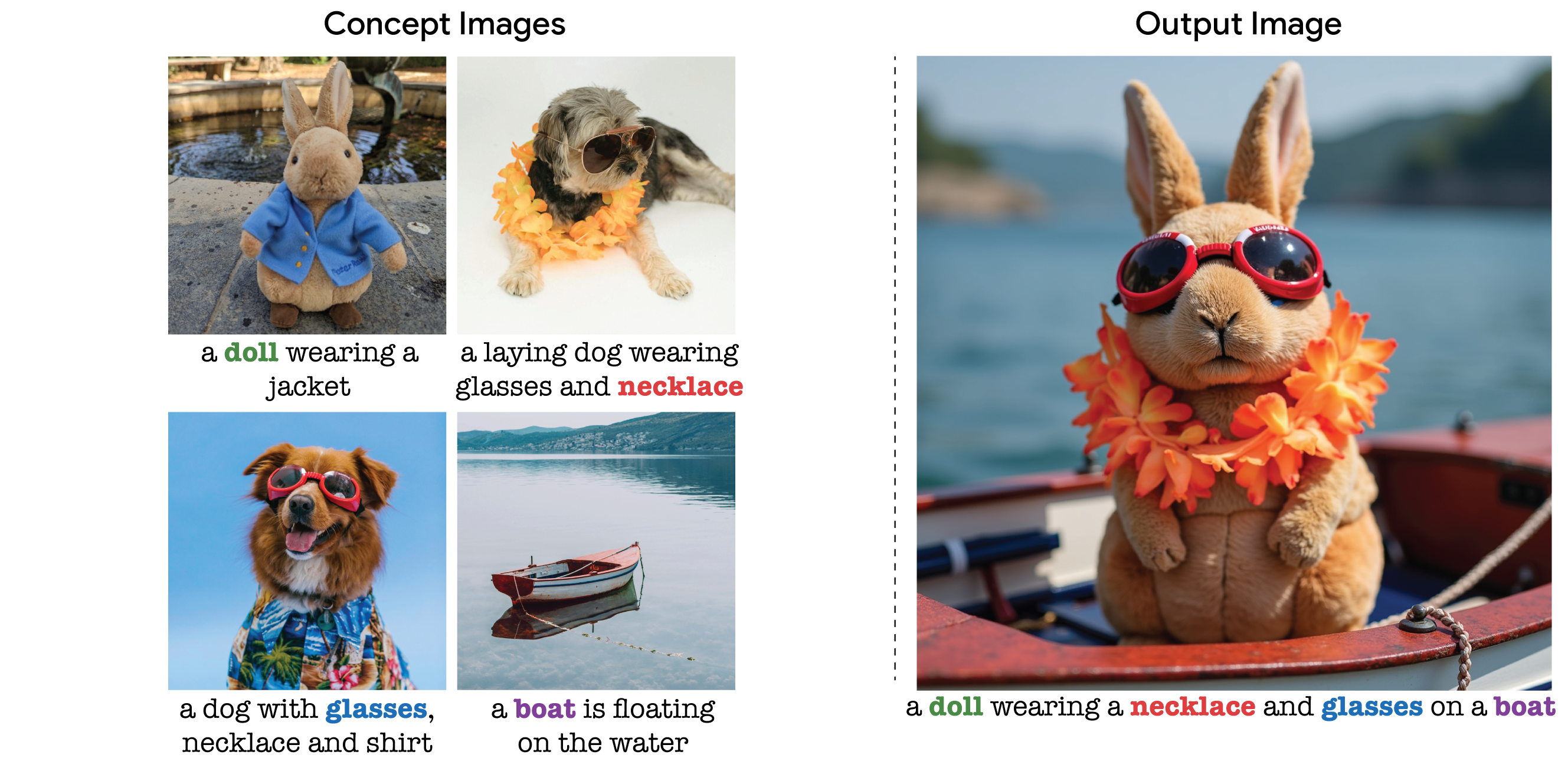

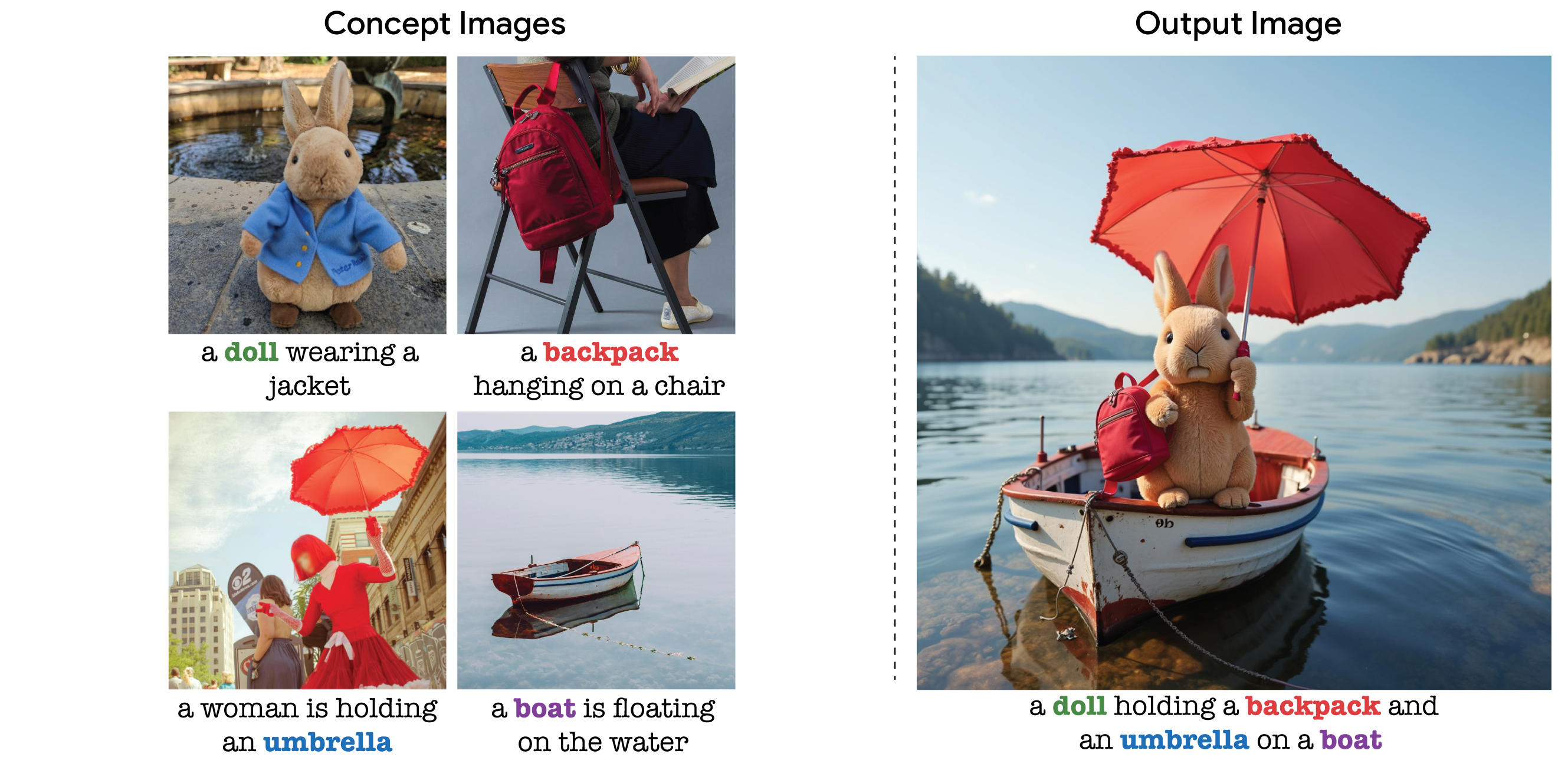

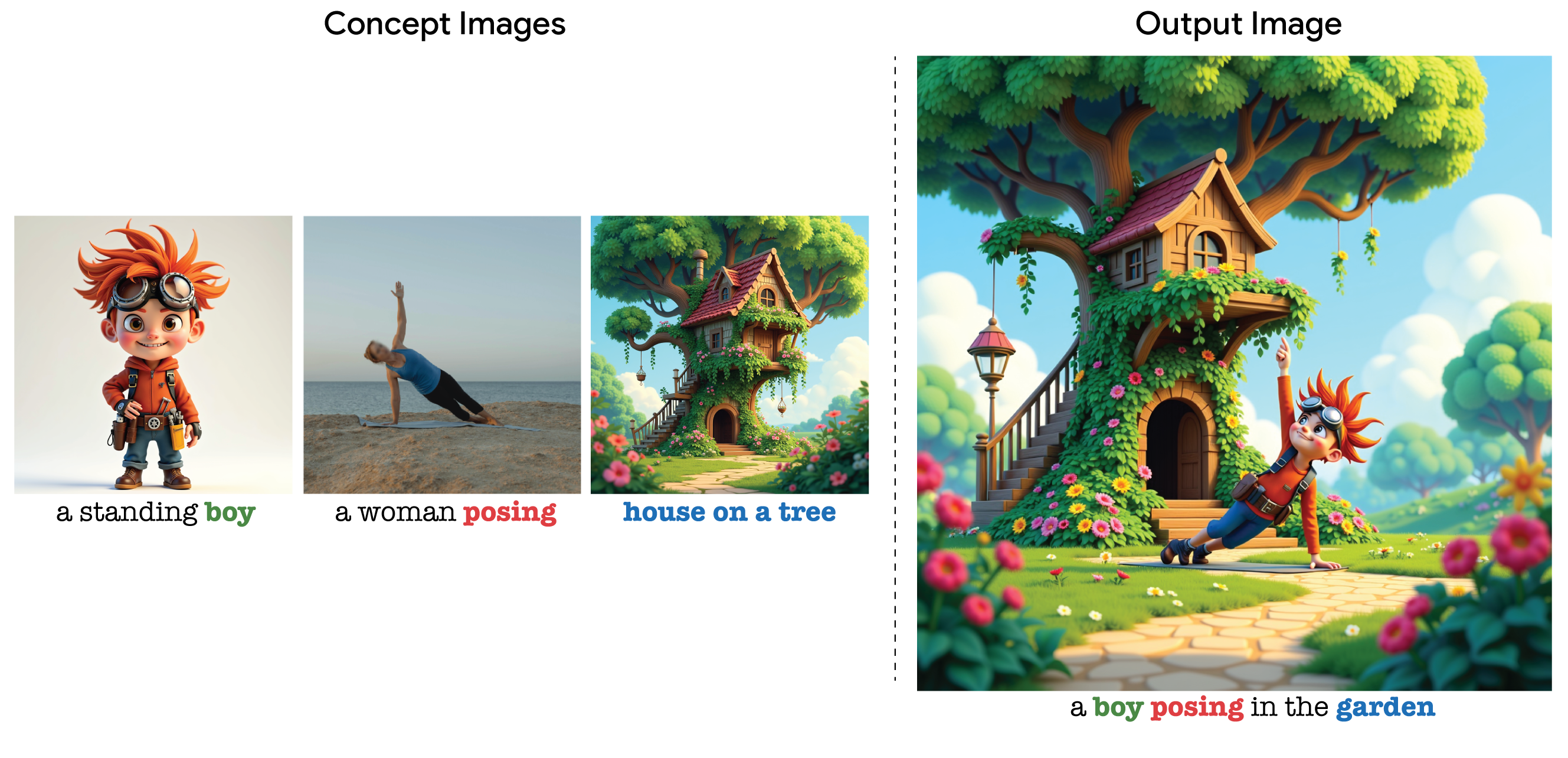

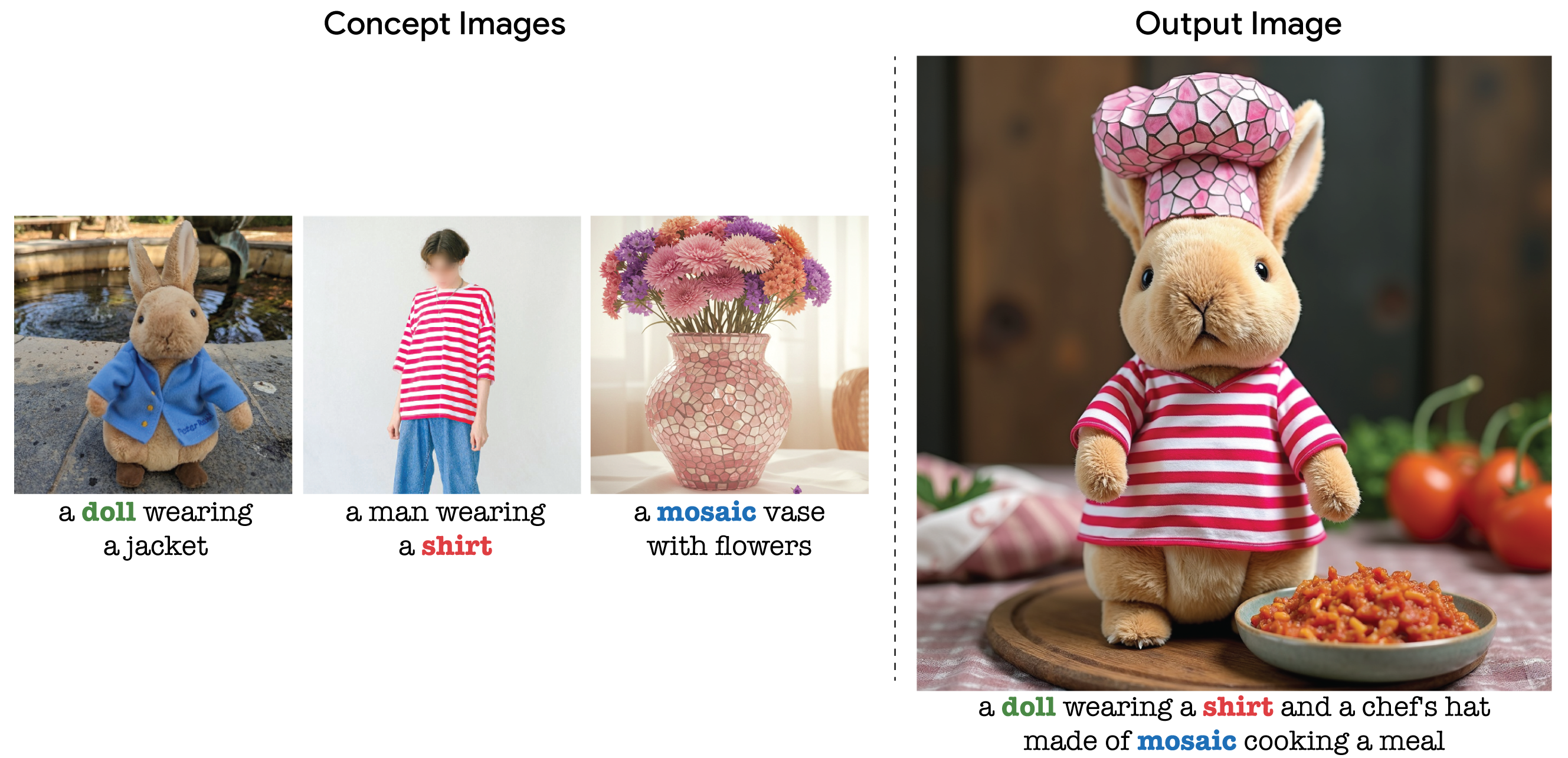

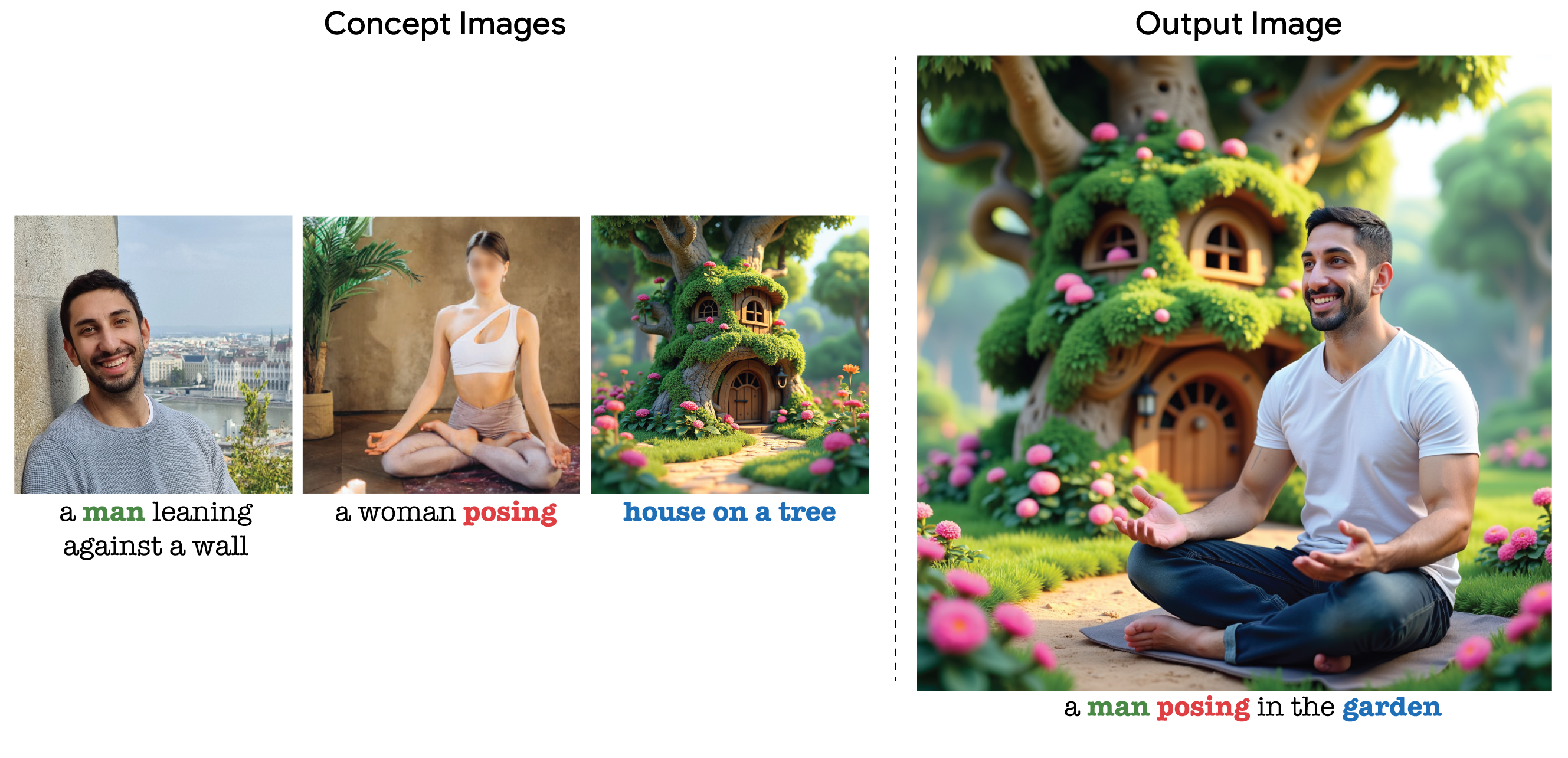

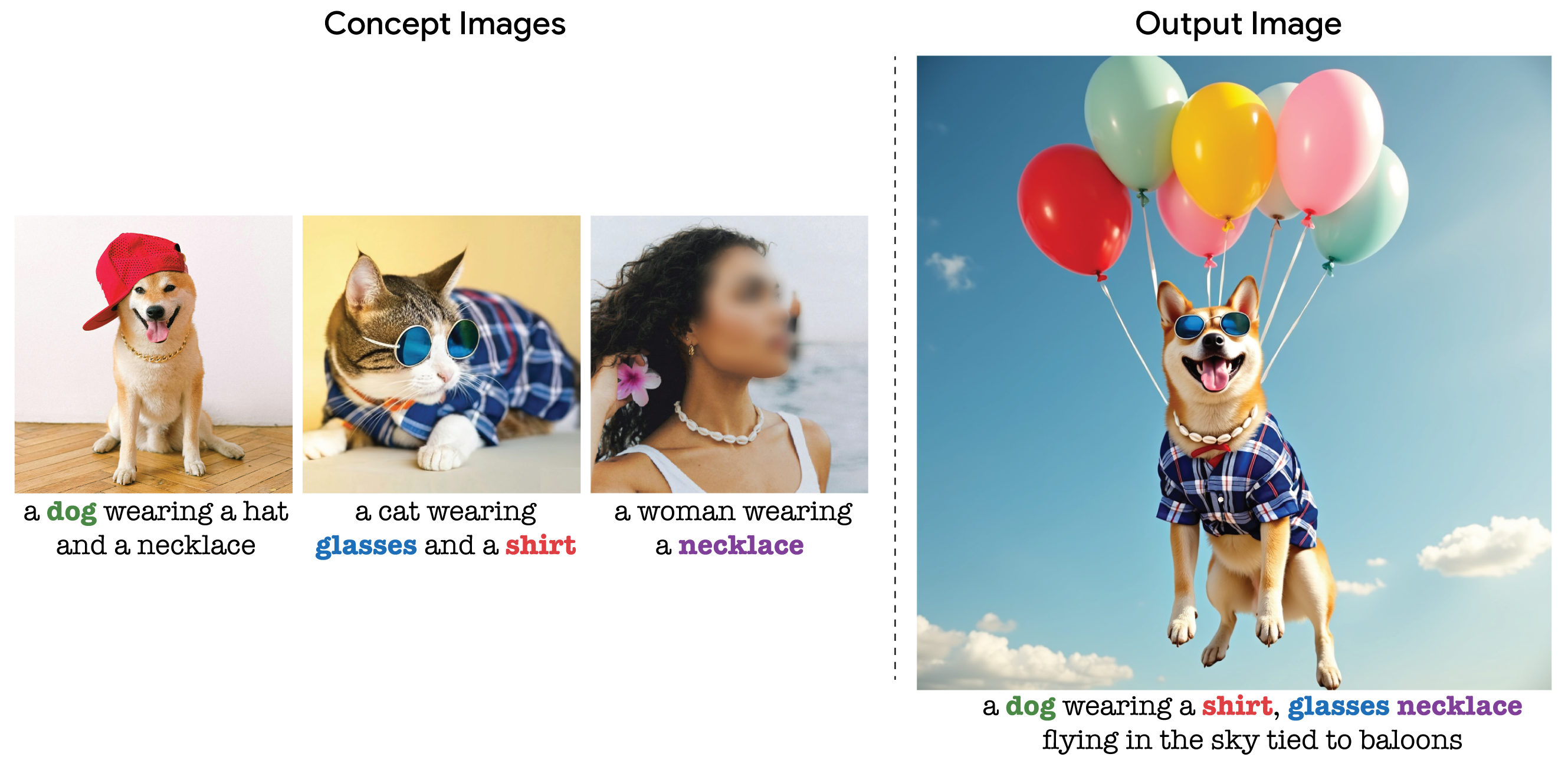

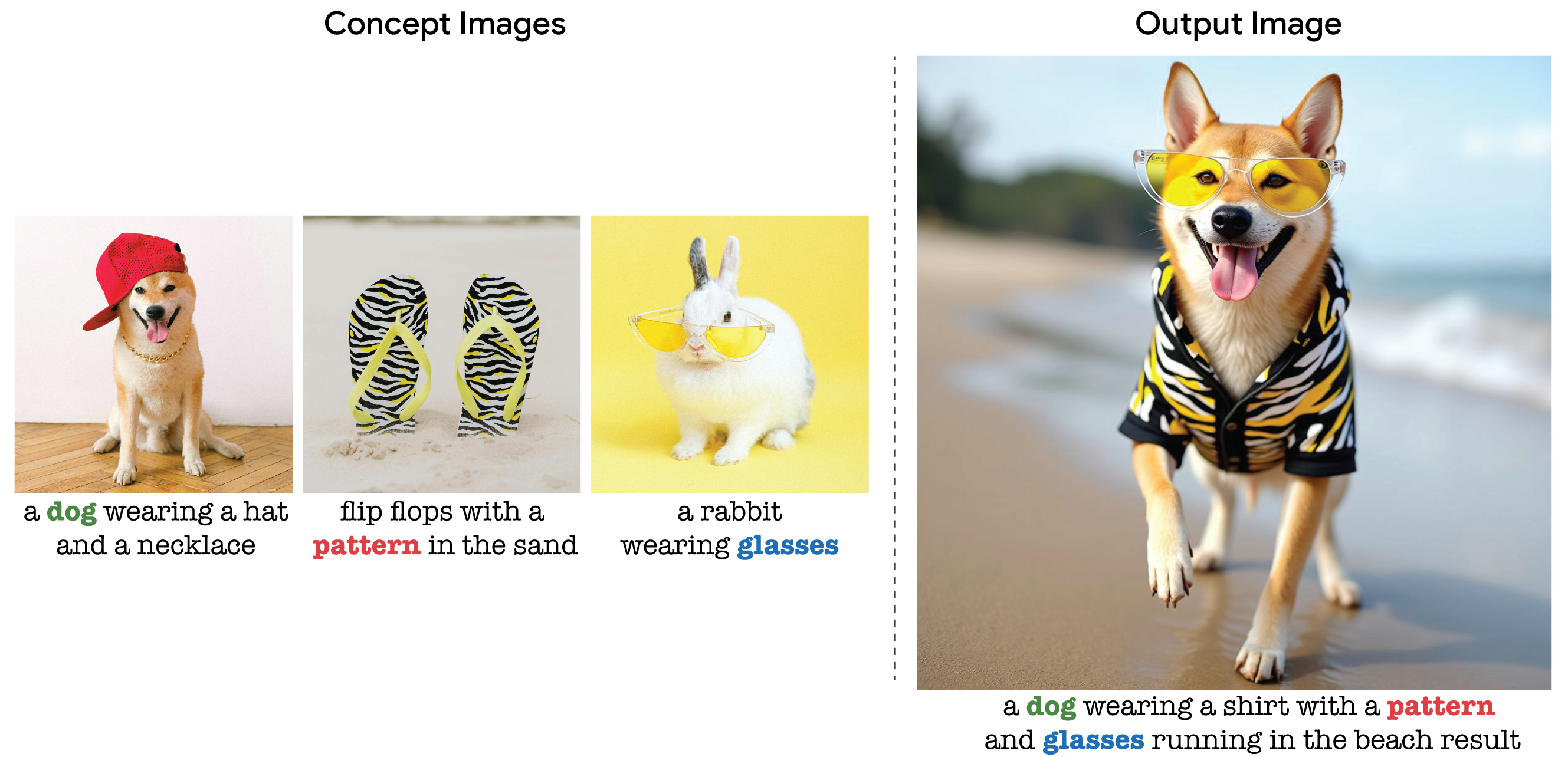

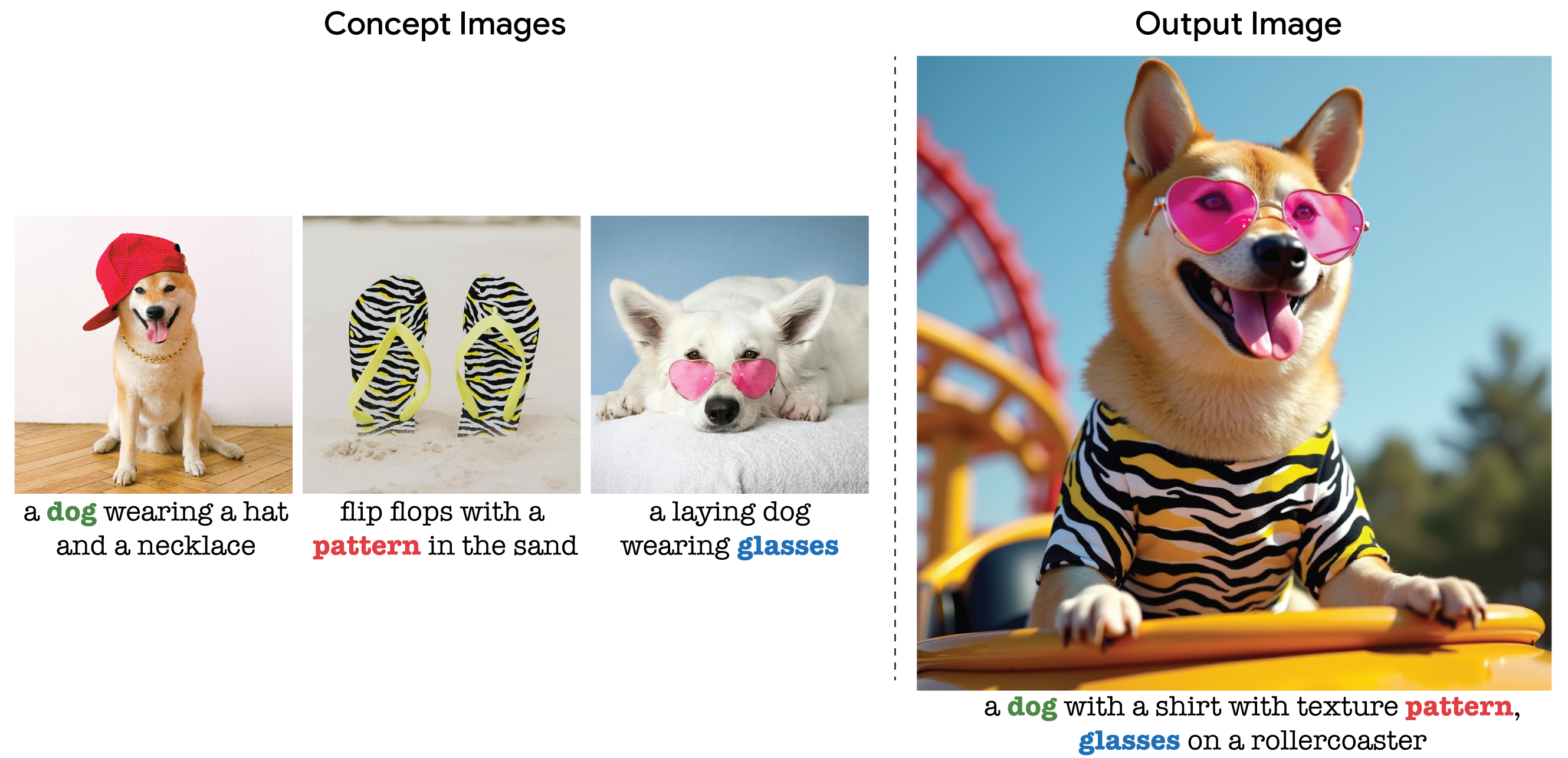

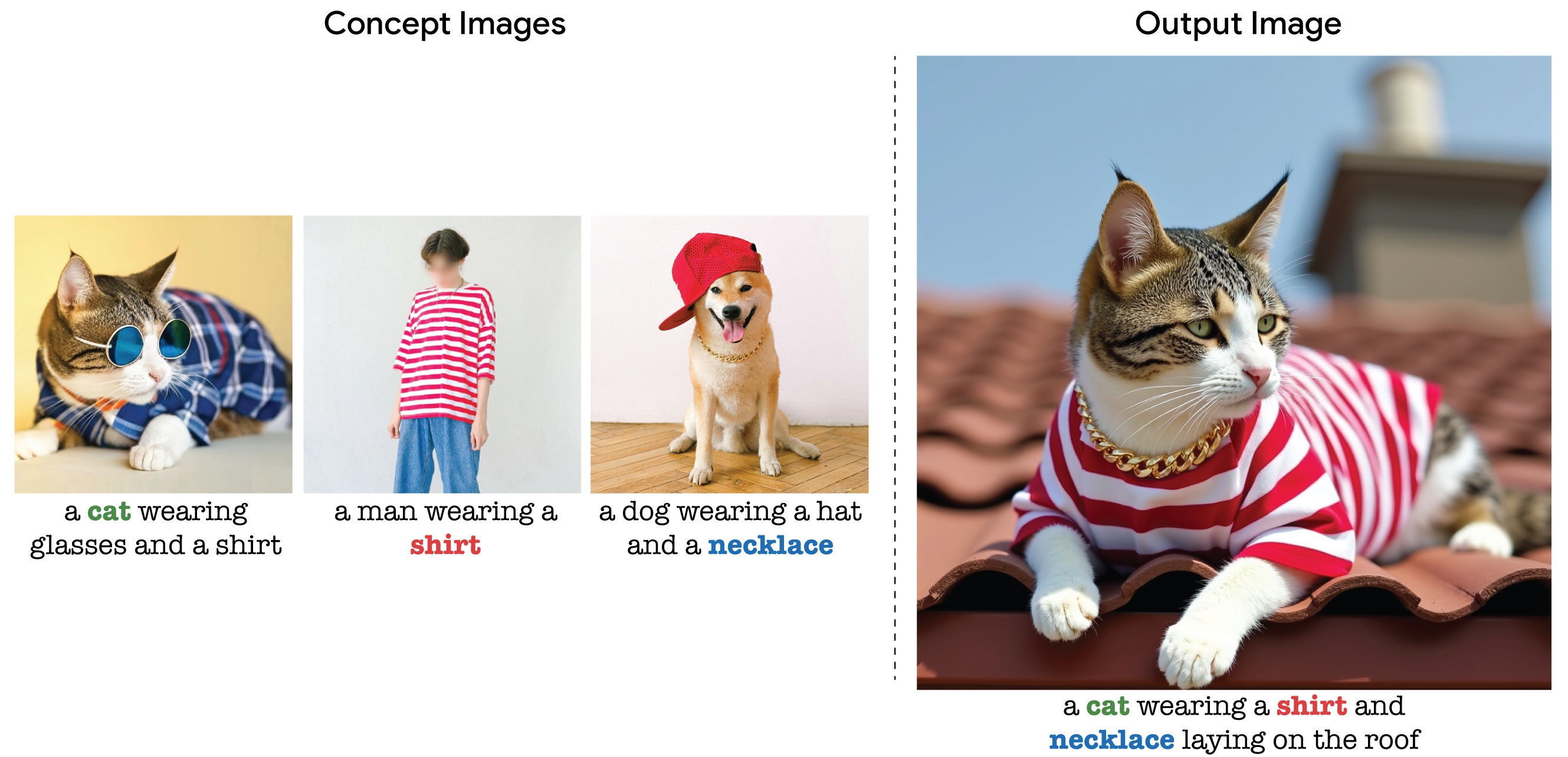

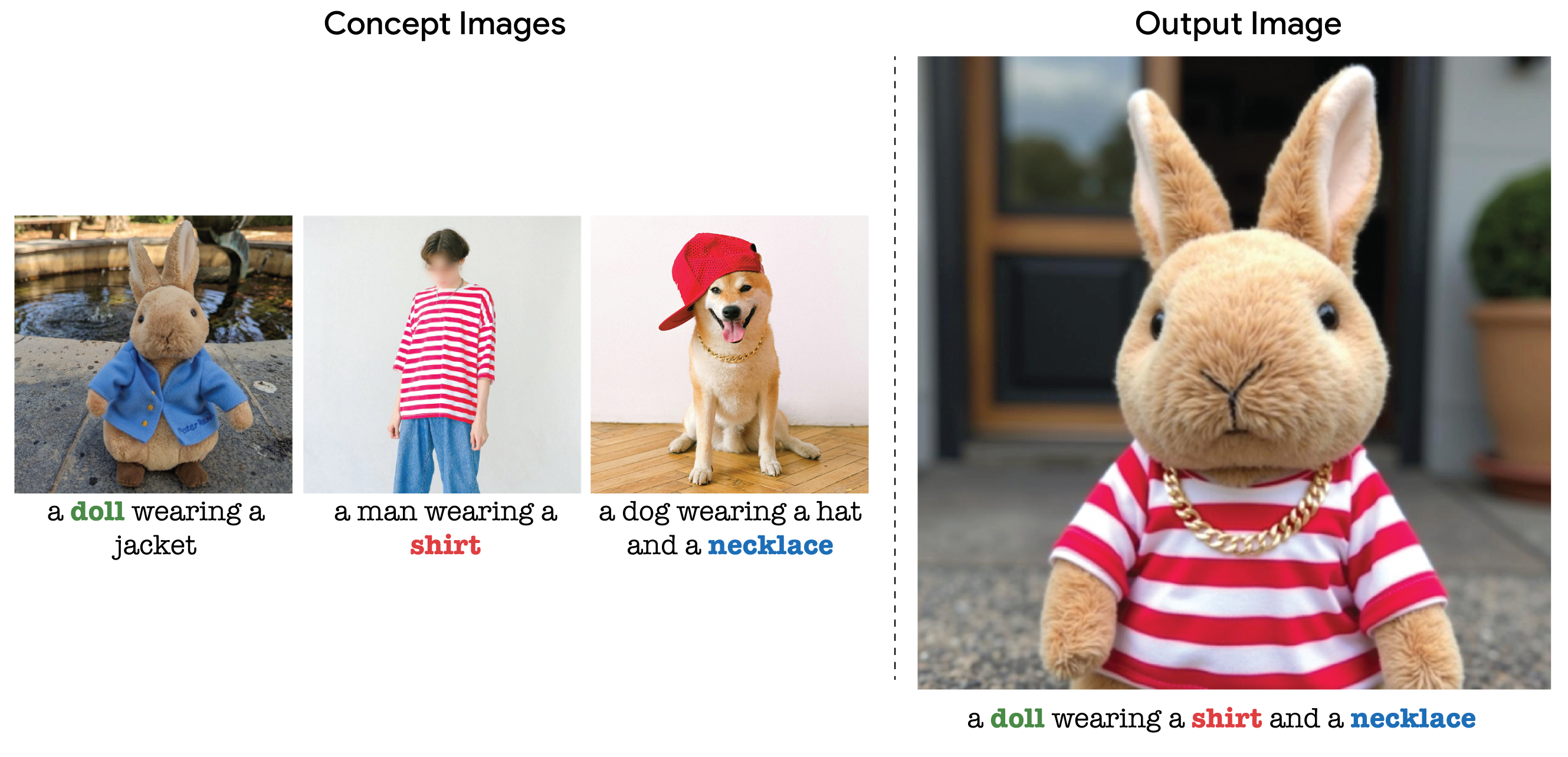

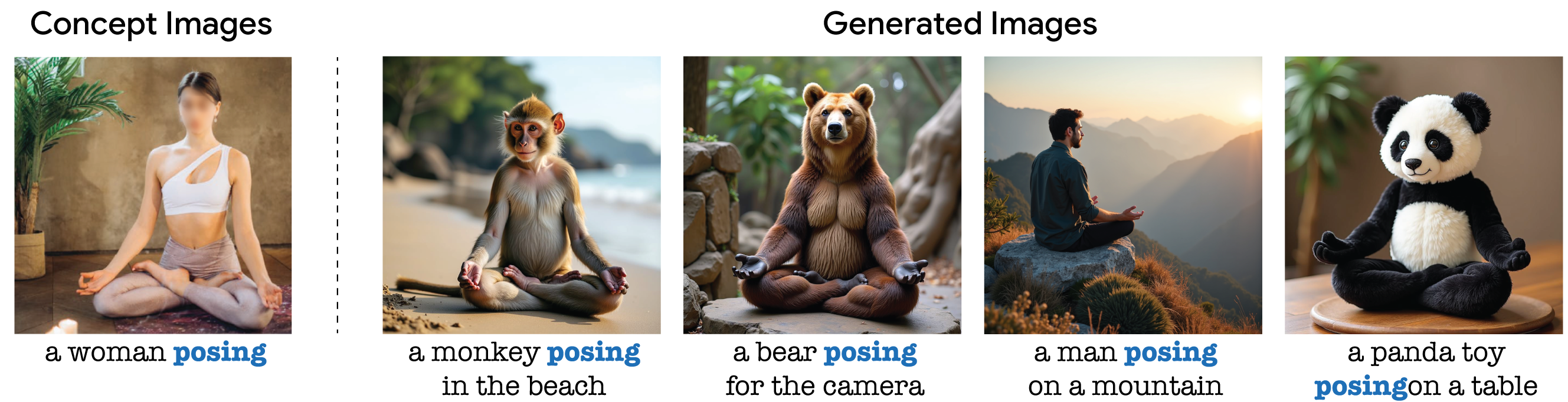

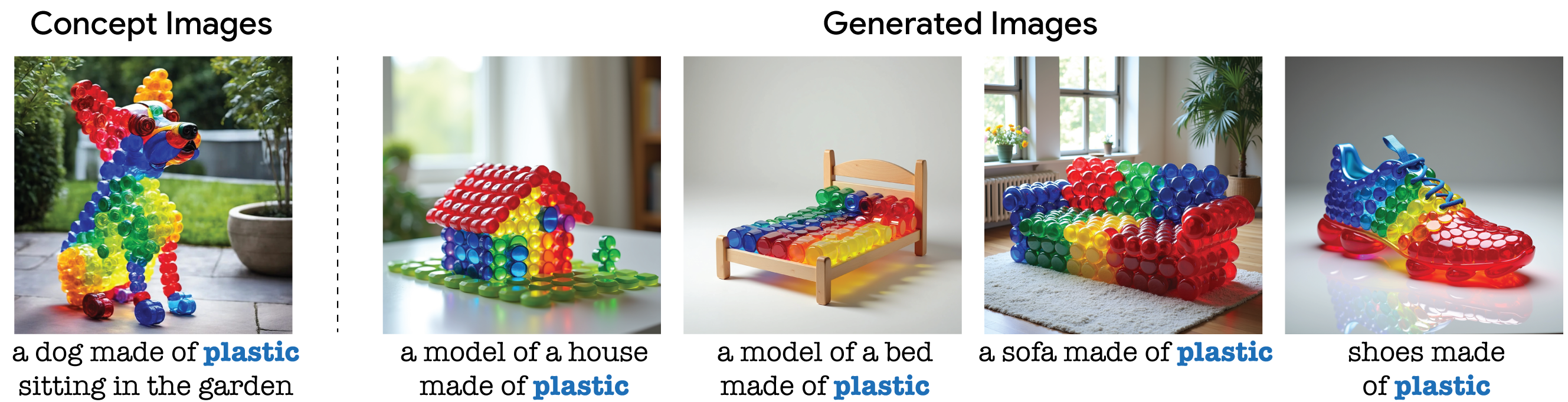

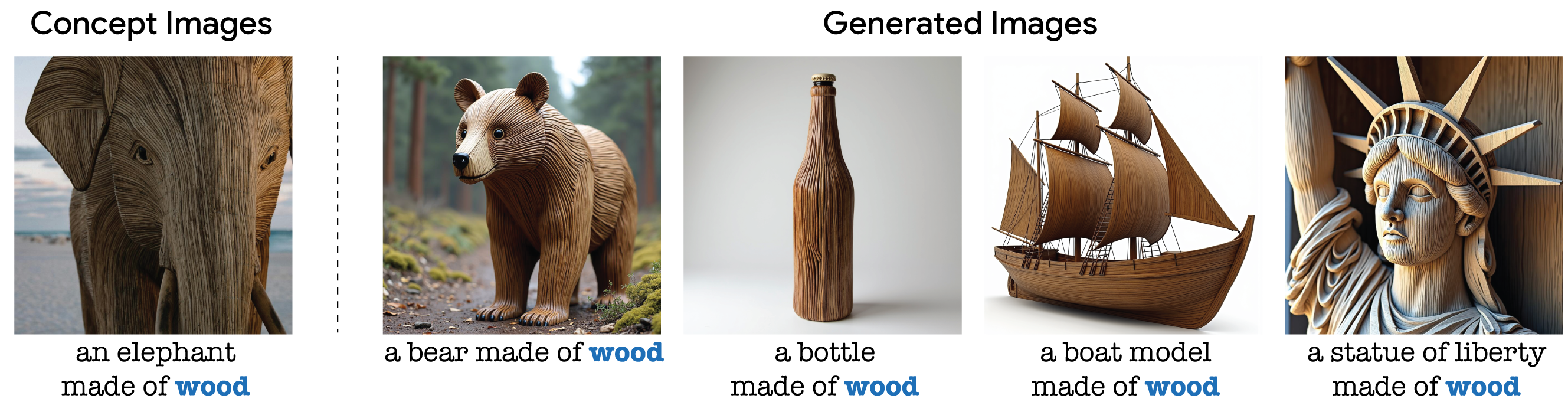

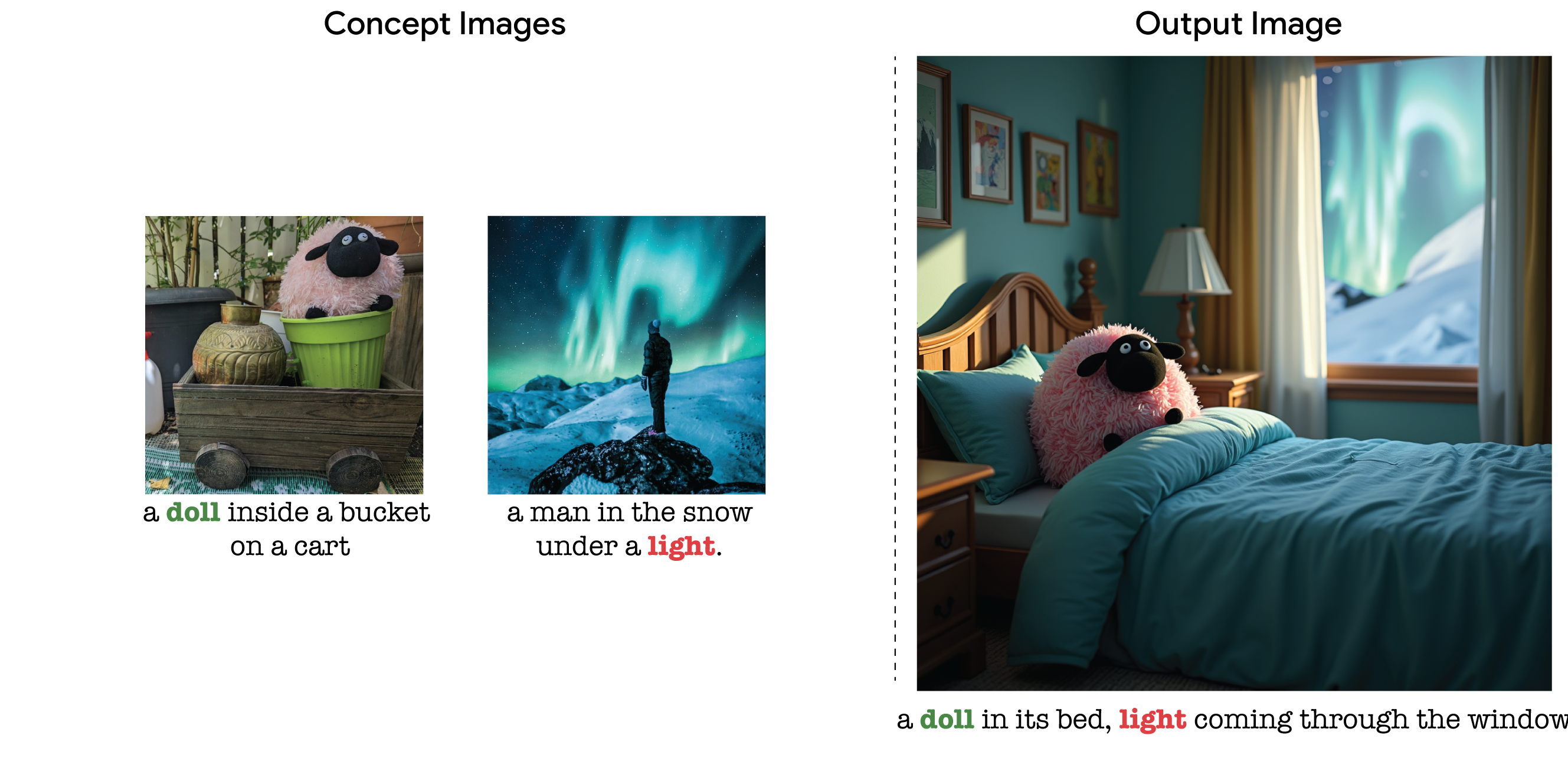

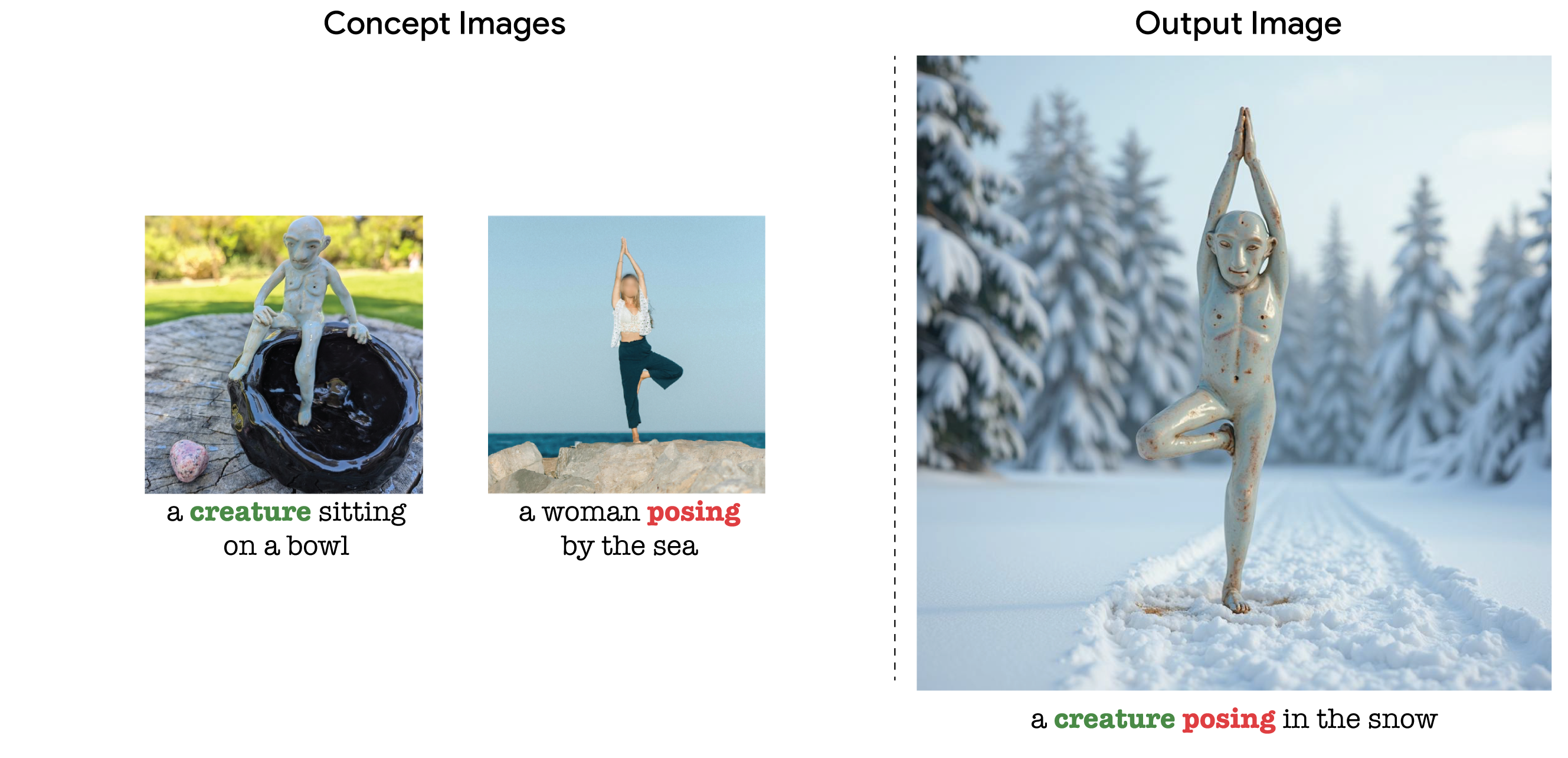

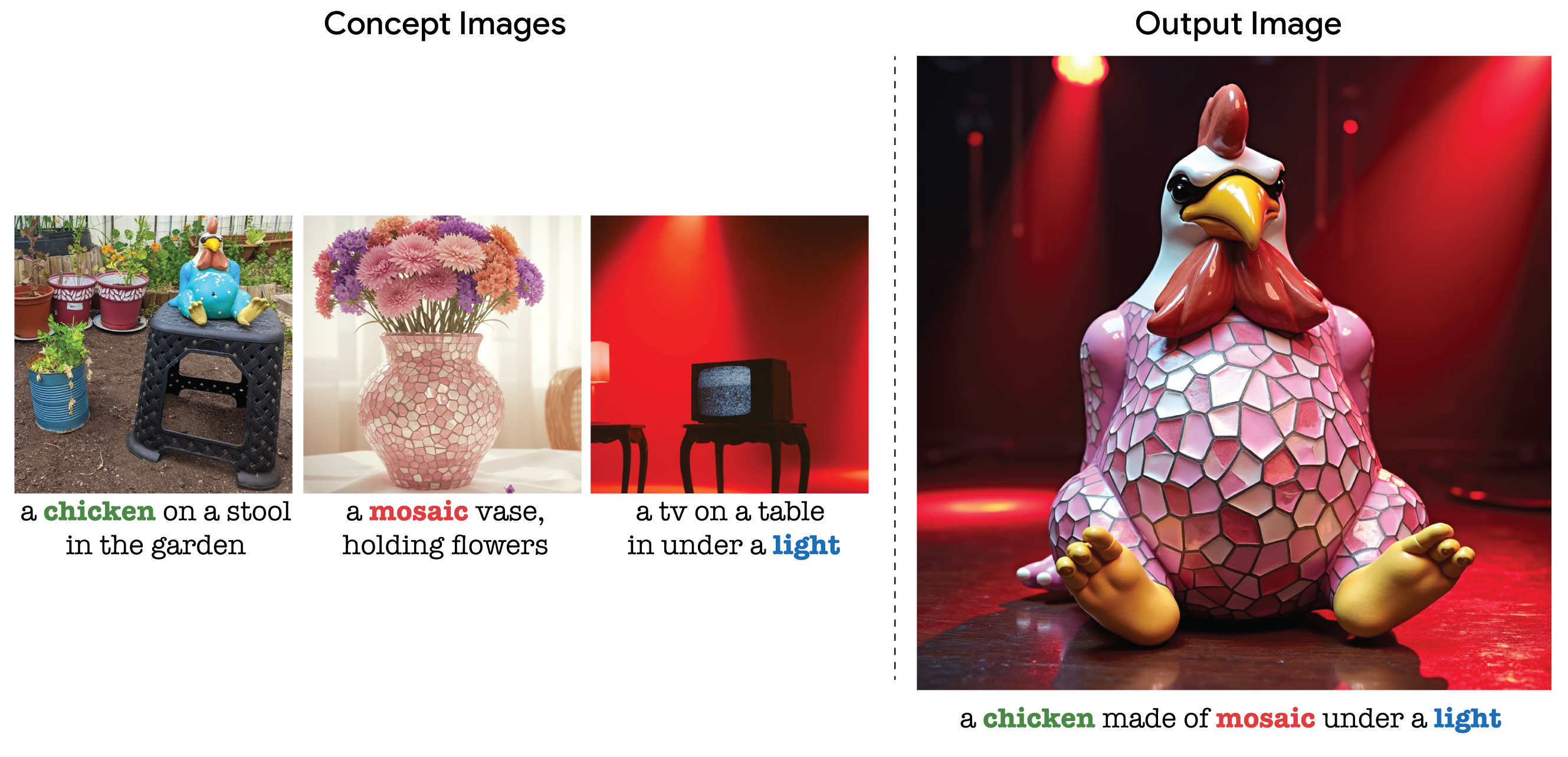

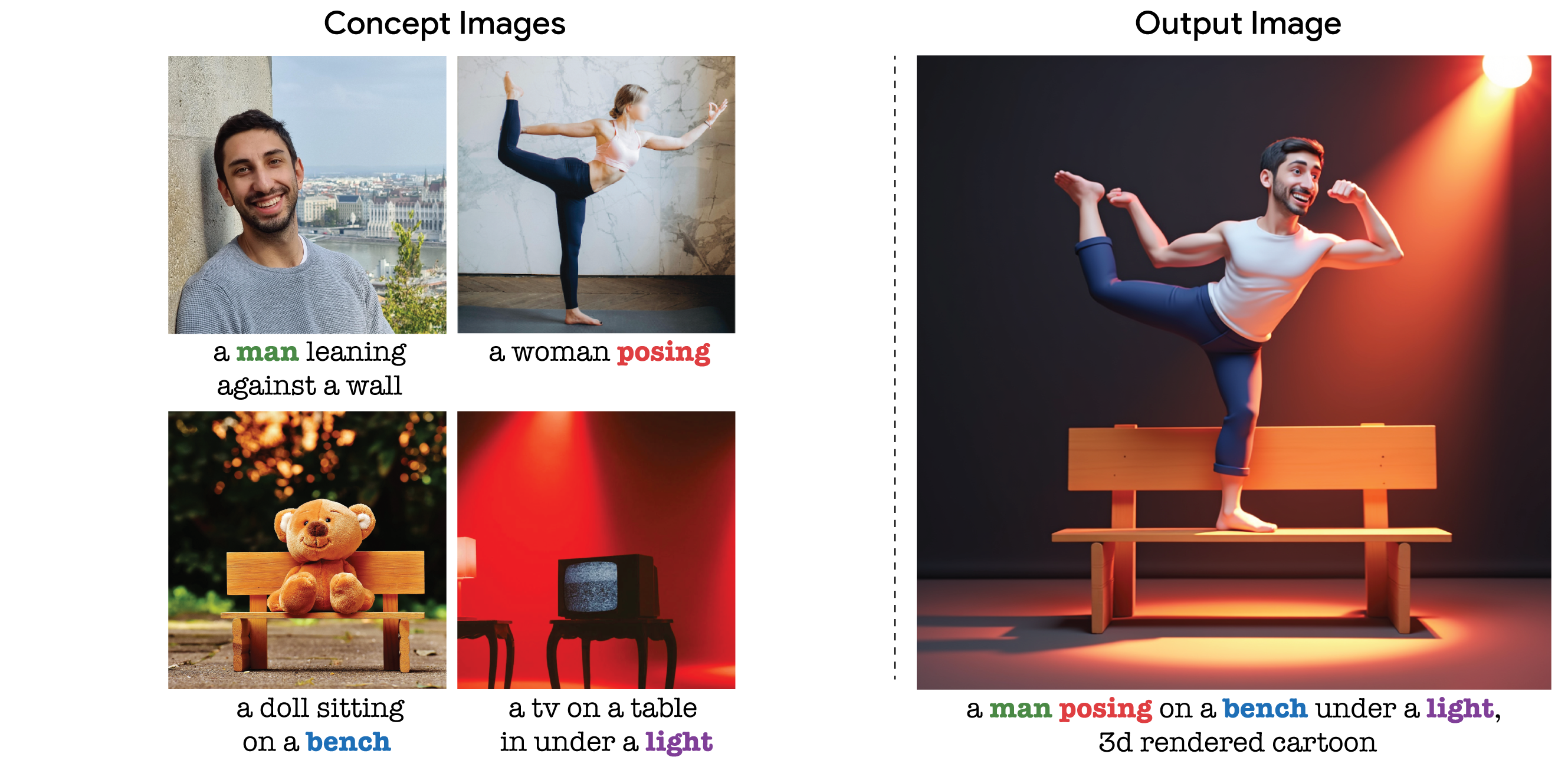

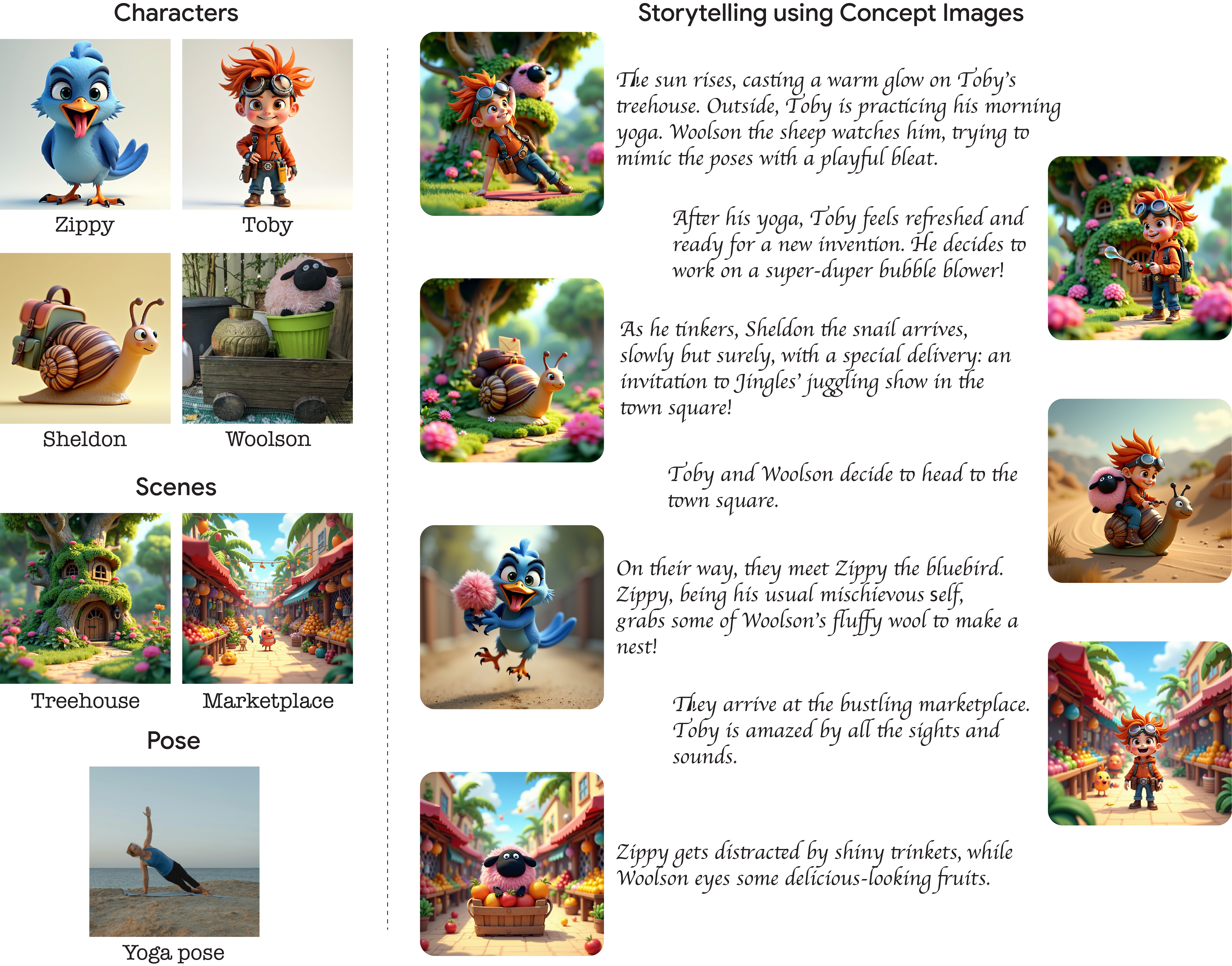

Results

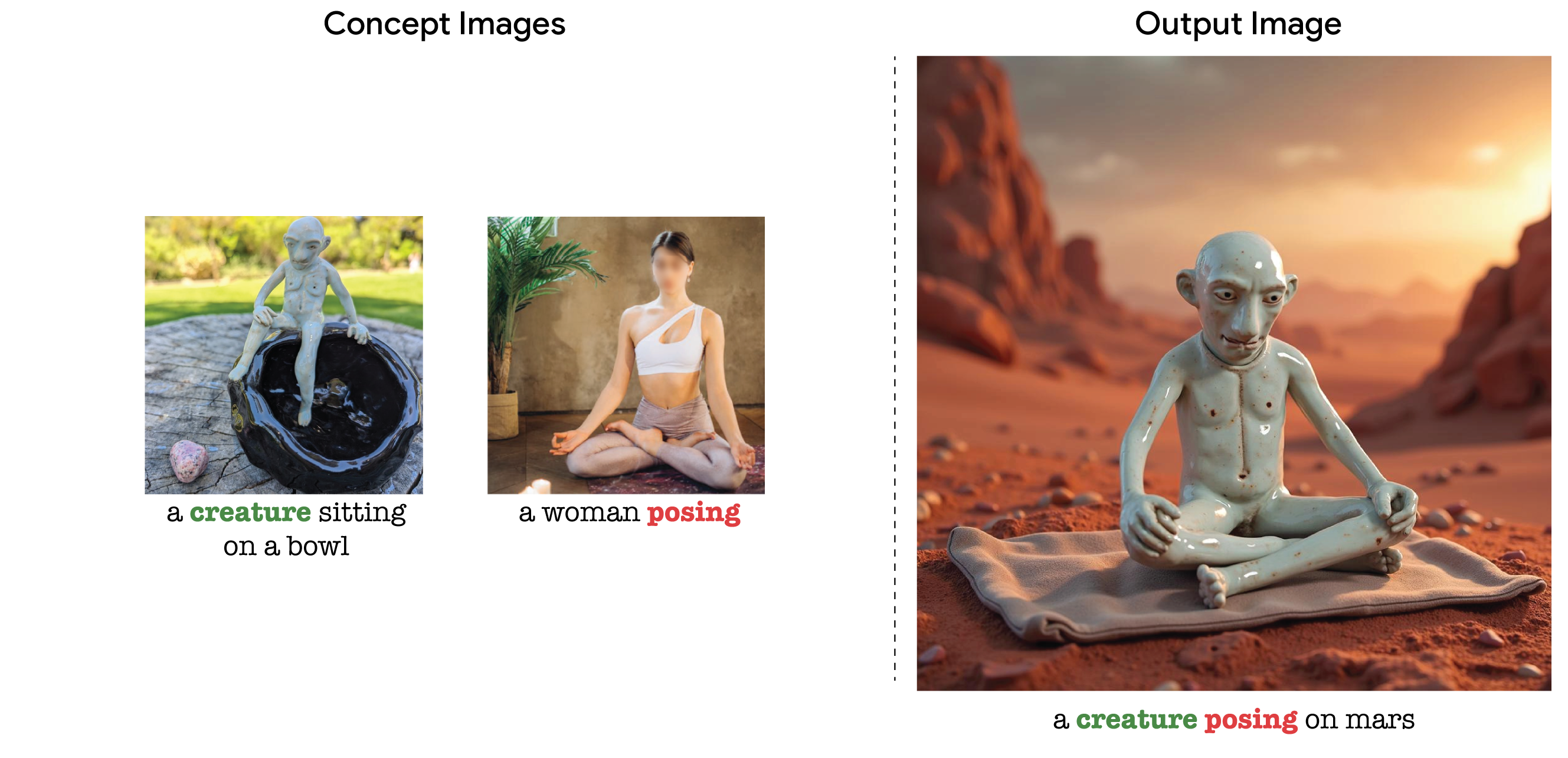

We present TokenVerse -- a method for multi-concept personalization, leveraging a pre-trained text-to-image diffusion model. Our framework can disentangle complex visual elements and attributes from as little as a single image, while enabling seamless plug-and-play generation of combinations of concepts extracted from multiple images. As opposed to existing works, which are restricted either in the type or breadth of concepts they can handle, TokenVerse can handle multiple images with multiple concepts each, and supports a wide-range of concepts, including objects, accessories, materials, pose, and lighting. Our work exploits a DiT-based text-to-image model, in which the input text affects the generation through both attention and modulation (shift and scale). We observe that the modulation space is semantic and enables localized control over complex concepts. Building on this insight, we devise an optimization-based framework that takes as input an image and a text description, and finds for each word a distinct direction in modulation space. These directions can then be used to generate new images that combine the learned concepts in a desired configuration. We demonstrate the effectiveness of TokenVerse in challenging personalization settings, and showcase its advantages over existing methods.

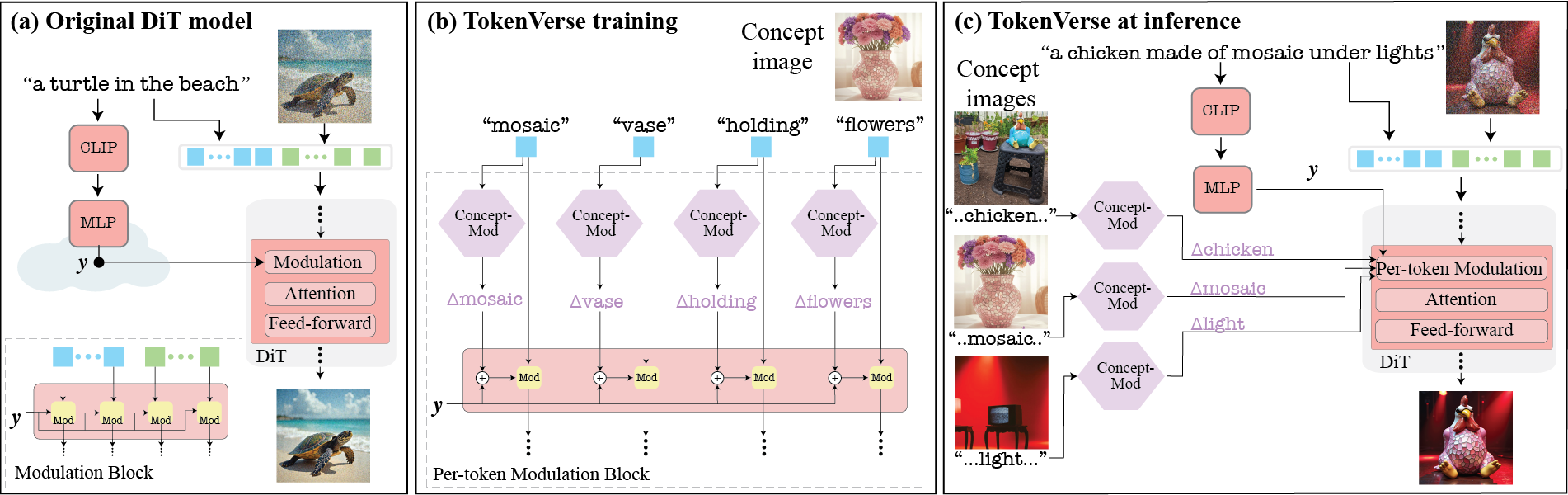

Our method leverages the exisitng modulation mechanism in DiT models to extract concept specific information from user provided images.

(a) A pre-trained text-to-image DiT model processes both image and text tokens via a series of DiT blocks. Each block consists of modulation, attention and feed-forward modules. We focus on the modulation block, in which the tokens are modulated via a vector y, which is derived from a pooled text embedding.

(b) In our TokenVerse method, given a concept image and its corresponding caption, we learn a personalized modulation vector adjustment delta for each text embedding. These adjustments represent personalized directions in the modulation space and are learned using a simple reconstruction objective.

(c) At inference, the pre-learned direction vectors are added to the text embeddings, enabling injection of personalized concepts into the generated images.

@misc{garibi2025tokenverseversatilemulticonceptpersonalization,

title={TokenVerse: Versatile Multi-concept Personalization in Token Modulation Space},

author={Daniel Garibi and Shahar Yadin and Roni Paiss and Omer Tov and Shiran Zada and Ariel Ephrat and Tomer Michaeli and Inbar Mosseri and Tali Dekel},

year={2025},

eprint={2501.12224},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2501.12224},

}